Training models with unequal economic error costs using Amazon SageMaker

Many companies are turning to machine learning (ML) to improve outcomes for their customers and their business. To do so, they use ML models built on "big data" to identify patterns. They can then identify appropriate approaches and predict likely outcomes for new instances. However, because ML models are approximations of the real world, some of these predictions will likely be wrong.

In some applications, all types of prediction errors truly have equivalent impact. In other applications, one kind of error is much more costly or consequential than another, whether measured in dollars, time, or some other unit. For example, according to medical estimates, predicting that someone does not have breast cancer when they actually do (a false negative error) carries a much greater cost than the reverse error. If false negative errors can be reduced sufficiently, we may even be willing to tolerate more false positive errors.

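In this blog post, we address applications with unequal error costs, with the goal of reducing undesirable errors while making the trade-offs more transparent. We show how to train a model in Amazon SageMaker for a binary classification problem in which the costs of the different kinds of misclassification are very different. To explore this trade-off, we show how to write a custom loss function (the metric that evaluates how well the model is making predictions) that incorporates asymmetric misclassification costs. We then show how to train an Amazon SageMaker Build Your Own Model using that loss function. Finally, we show how to evaluate the errors the model makes and how to compare models trained with different relative costs, so that you can identify the model with the best overall economic outcome.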

The advantage of this approach is that it explicitly links the ML model's outcomes and errors to the business's decision-making framework. It requires the business to explicitly state its cost matrix, based on the specific actions to be taken on the predictions. The business can then evaluate the economic consequences of the model's predictions in terms of its overall process, the actions taken on the predictions, and their associated costs. This evaluation goes beyond simply assessing the model's classification results. The approach can drive challenging discussions in the business and bring various implicit decisions and valuations into the open for discussion and agreement.

Overview of the background and solution

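Model training always aims to minimize errors, but most models are trained on the assumption that all types of error are equal. What if we know that the costs of different types of error are not equal? Take, for example, a sample model trained on UCI's breast cancer diagnostic dataset. [1] Clearly, a false positive prediction (predicting breast cancer when there is none) has very different consequences from a false negative prediction (predicting no breast cancer when there is). In the first case, the result is additional screening. In the second case, the cancer may progress before it is discovered. To quantify these consequences, they are often discussed in terms of relative cost, which allows trade-offs to be made. We can debate what the exact costs of a false negative or a false positive prediction should be, but we are confident that everyone would at least agree that they are not the same, even though ML models are generally trained as if they were.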

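You can use a custom cost function to evaluate a model and see the economic impact of the errors it makes (utility analysis). Elkan [2] showed that applying a cost function to a model's results can compensate for imbalanced samples when used with standard Bayesian and decision tree learning methods (for example, a sample with few loan defaults and many loan repayments). You can also use a custom function to perform this same compensation.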

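You can also provide the model with the costs of the different types of error during training, by using a custom loss function, so that the model "shifts" its predictions in a way that reflects the difference in costs. For example, in the breast cancer example above, we want the model to make fewer false negative errors, and we are willing to accept more false positives to achieve that. We might even be willing to give up some "correct" predictions in order to have fewer false negatives. At the least, we want to understand the trade-offs. In this example, we use costs from the medical industry. [3, 4]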

In addition, we want to understand how many of the model's predictions were "almost" predicted differently. For example, a binary model uses a cutoff (say, 0.5) to classify a score as "True" or "False". How many cases were actually very close to the cutoff? Was a false negative classified that way only because its score was 0.499999? These details can't be seen in the usual representations of confusion matrices or AUC evaluations. To address these questions, we developed a novel graphical representation of model predictions that lets you examine these details without depending on a specific threshold.

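In fact, there are likely cases where a model trained to avoid specific types of error starts to specialize in differentiating between errors. Imagine a neural network trained to believe that all misidentifications of street signs are equal. [5] Now imagine a neural network trained to understand that misidentifying a stop sign as a 45 mph speed limit sign is a far worse error than confusing two speed limit signs. It is reasonable to expect that the neural network would begin to recognize different features. We believe this is a promising research direction.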

We use Amazon SageMaker to build and host our model. Amazon SageMaker is a fully managed platform that enables developers and data scientists to quickly and easily build, train, and deploy machine learning models at any scale. We author and analyze the model in a Jupyter notebook hosted on an Amazon SageMaker notebook instance, then build and deploy an online prediction endpoint using its Bring Your Own Model capability.

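The terms "cost function" and "loss function" are often used interchangeably, but this post distinguishes them: the loss function is what the model optimizes during training, and the cost function is what we use to evaluate the economic impact of the trained model's predictions.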

This distinction lets you further fine-tune the model's behavior and reflect differences in the effects of different components. In this post, we use the same set of costs (quality-adjusted life years, QALY) for both functions, but you could use different ones.

We divide the problem into three parts: building the custom loss function, training the models, and analyzing the results.

We build our own model rather than using one of the Amazon SageMaker built-in algorithms. This means we rely on Amazon SageMaker's ability to train any custom model, as long as it is packaged in a Docker container whose image is available in Amazon Elastic Container Registry (Amazon ECR). For more information about how to train custom models with Amazon SageMaker, see this article or the various available sample notebooks.

Setup

To set up the environment needed to run this example in your own AWS account, first follow the previously published blog post to set up an Amazon SageMaker notebook instance, adding the AmazonEC2ContainerRegistryFullAccess policy to the instance's execution role. Next, open a terminal as shown in Step 2 and clone the GitHub repository (https://github.com/aws-samples/amazon-sagemaker-custom-loss-function) to your Amazon SageMaker notebook instance.

This repository contains a directory named "container" that has all the components needed to build and use a Docker image of the algorithm that we run in this blog post. The details of each component are described in this Amazon SageMaker sample notebook. For our purposes, two files are the most relevant and contain all the information needed to run the workload.

The notebook then imports libraries, creates helper functions, imports the breast cancer dataset, standardizes the data, and exports the training and test sets to Amazon S3 for later use in Amazon SageMaker training.

Here, we build a loss function that weights false negative errors differently from false positive errors. To do this, we build a binary classifier in Keras and use Keras's support for user-defined loss functions.

To build a loss function in Keras, define a Python function that takes the model predictions and the ground truth as arguments and returns a scalar value. Our custom function also takes as input the costs associated with a false negative error (fn_cost) and a false positive error (fp_cost). Note that internally, the loss function must perform its calculations using Keras backend functions.

The following function defines the loss of a single prediction as the difference between the prediction's ground-truth class and the predicted value, weighted by the cost associated with misclassifying an observation from that ground-truth class. The total loss is the unweighted average of all of these losses. This is a relatively simple loss function, but it can serve as a foundation for building more complex loss functions that reflect specific benefit and cost structures.

    from keras import backend as K

    def custom_loss_wrapper(fn_cost=1, fp_cost=1):
        # Returns a Keras-compatible loss that closes over the two error costs.
        def custom_loss(y_true, y_pred, fn_cost=fn_cost, fp_cost=fp_cost):
            h = K.ones_like(y_pred)
            fn_value = fn_cost * h
            fp_value = fp_cost * h
            # Actual positives are penalized by fn_cost, actual negatives by fp_cost.
            weighted_values = y_true * K.abs(1-y_pred)*fn_value + (1-y_true) * K.abs(y_pred)*fp_value
            loss = K.mean(weighted_values)
            return loss
        return custom_loss

Because we use Amazon SageMaker to train a custom model, all of the model-building and training code lives in a Docker container image stored in Amazon ECR. The code shown here is an example of the code contained in that Docker container image.
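To make the loss arithmetic above concrete, here is a minimal plain-Python restatement of the same formula, without Keras. The two patients and their scores are hypothetical, chosen only to show how raising fn_cost changes the loss; this is a sketch for illustration, not the code that runs in the container.

```python
def custom_loss(y_true, y_pred, fn_cost=1.0, fp_cost=1.0):
    """Mean cost-weighted absolute error, mirroring the Keras loss above."""
    weighted = [
        t * abs(1 - p) * fn_cost + (1 - t) * abs(p) * fp_cost
        for t, p in zip(y_true, y_pred)
    ]
    return sum(weighted) / len(weighted)


# Two hypothetical patients: one malignant (1) scored 0.2, one benign (0) scored 0.8.
y_true = [1, 0]
y_pred = [0.2, 0.8]

# Both errors weighted equally.
equal_loss = custom_loss(y_true, y_pred)

# The missed malignancy is weighted 5 times as heavily as the false alarm.
skewed_loss = custom_loss(y_true, y_pred, fn_cost=5.0)
```

With equal costs, both errors contribute the same amount to the loss (about 0.8 on average). With fn_cost=5, the missed malignancy dominates and the loss rises to about 2.4, so the optimizer has more to gain by moving the malignant case's score toward 1.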

The actual model code (including a copy of the custom loss function above), along with all the files needed to create the Docker container image and push it to Amazon ECR, is in the repository associated with this blog post.

We build and train three models so that we can compare the predictions of models trained with Keras's built-in loss function and with custom loss functions. Each is a binary classification model that predicts the probability that a tumor is malignant.

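The three models are: one trained with Keras's built-in binary cross-entropy loss function, one trained with the custom loss function with false negatives weighted 5 times as heavily as false positives, and one trained with the custom loss function using weights taken from the medical literature.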

The costs used in the last model's loss function are based on the medical literature. [3, 4] Screening costs are measured in QALYs. One QALY is defined as one year of life in full health (1 year × 1.0 health). For example, if a person is at half health, that is, 0.5 of full health, then one year of that person's life equals 0.5 QALY (1 year × 0.5 health). Two years of that person's life equal 1 QALY (2 years × 0.5 health).

| Outcome | QALY |
| --- | --- |
| True negative | 0 |
| False positive | -0.01288 |
| True positive | -0.3528 |
| False negative | -2.52 |

Here, the true negative outcome is taken as the cost baseline. That is, all other costs in the table are measured relative to a patient who does not have breast cancer and tests negative. A woman who has breast cancer but tests negative loses 2.52 QALY relative to the baseline, and a woman who does not have breast cancer but tests positive loses 0.0128767 QALY (about 4.7 days) relative to the baseline. The estimated economic value of one QALY is 100,000 USD, so these values can be converted to dollar costs by multiplying the cost in QALY by 100,000. By these values, a false negative error is about 200 times more costly than a false positive error. See the medical literature cited earlier for details on these costs.
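The conversions described above can be checked with a few lines of arithmetic. This is a small sketch using only the QALY figures and the 100,000 USD per QALY value stated in this post; the variable names are ours.

```python
QALY_DOLLAR_VALUE = 100_000  # estimated economic value of one QALY, in USD

# QALY change for each outcome, relative to the true-negative baseline.
qaly_by_outcome = {
    "true_negative": 0.0,
    "false_positive": -0.0128767,
    "true_positive": -0.3528,
    "false_negative": -2.52,
}

# Convert each outcome to a dollar value.
dollars_by_outcome = {
    outcome: qaly * QALY_DOLLAR_VALUE for outcome, qaly in qaly_by_outcome.items()
}

# How much more costly is a false negative than a false positive?
fn_to_fp_ratio = qaly_by_outcome["false_negative"] / qaly_by_outcome["false_positive"]

# The false-positive loss expressed in days of full health.
fp_days_lost = -qaly_by_outcome["false_positive"] * 365
```

The ratio comes out a little under 196, which is where the rounded figure of 200 used for the medical model comes from.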

The value of 5 in the middle model was chosen for demonstration purposes.

With these costs in hand, you can estimate the models. Estimating the parameters of a Keras model is a three-step process: define the model structure, compile the model, and fit it to the training data.

First, define the model structure. In this case, the model consists of a single node in a single layer. That is, for each model below we add a single dense layer with a single unit, which takes a linear combination of the features and passes it to a sigmoid function that outputs a value between 0 and 1. The actual version of the code lives in the Docker container; it is shown here for explanation. The relative weights are introduced in a later step.
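To show what a single sigmoid unit computes, here is a minimal plain-Python sketch of the forward pass. The feature values, weights, and bias are hypothetical; in the real model, the weights and bias are learned during training.

```python
import math


def predict_malignant_probability(features, weights, bias):
    """Single sigmoid unit: squash a linear combination of features into (0, 1)."""
    z = sum(w * x for w, x in zip(weights, features)) + bias
    return 1.0 / (1.0 + math.exp(-z))


# Hypothetical standardized features and weights, for illustration only.
score = predict_malignant_probability([0.5, -1.2, 2.0], [0.8, 0.1, 0.6], bias=-0.3)
```

A model this small is equivalent in form to logistic regression, so the three models in this post differ only in the loss function used to fit the weights.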

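    from keras.models import Sequential
    from keras.layers import Dense

    # num_classes and input_dim are assumed to be defined already
    # (one output unit; input_dim is the number of features).

    # The "built-in" model is trained with Keras's built-in binary
    # cross-entropy loss function.
    model_builtin = Sequential()
    model_builtin.add(Dense(units=num_classes, input_dim=input_dim, activation='sigmoid'))

    # The "custom" model is trained with a custom loss function that weights
    # false negatives 5 times more heavily than false positives.
    model_five = Sequential()
    model_five.add(Dense(units=num_classes, input_dim=input_dim, activation='sigmoid'))

    # The "medical" model is trained with a custom loss function that assigns
    # the false negative and false positive weights from the medical literature.
    model_medical = Sequential()
    model_medical.add(Dense(units=num_classes, input_dim=input_dim, activation='sigmoid'))

Next, compile the models. Compiling a model means configuring the learning process: you specify the optimization algorithm and the loss function used to train the model.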

This is the step that incorporates the custom loss function and the relative weights into the model training process.

    # Train with the built-in loss function.
    model_builtin.compile(loss='binary_crossentropy', optimizer='sgd', metrics=['accuracy'])

    # Train with the custom loss function, weighting false negatives
    # 5 times as heavily as false positives.
    custom_loss_five = custom_loss_wrapper(fn_cost=5, fp_cost=1)
    model_five.compile(loss=custom_loss_five, optimizer='sgd', metrics=['accuracy'])

    # Train with the custom loss function, weighting false negatives
    # 200 times as heavily as false positives.
    custom_loss_medical = custom_loss_wrapper(fn_cost=200, fp_cost=1)
    model_medical.compile(loss=custom_loss_medical, optimizer='sgd', metrics=['accuracy'])

You are now ready to train the models. To do this, call the fit method and specify the training data, the number of epochs, and the batch size. The code for this step is the same whether you use a built-in or a custom loss function.

    model_builtin.fit(train_x, train_y, epochs=50, batch_size=32, verbose=0)
    model_five.fit(train_x, train_y, epochs=50, batch_size=32, verbose=0)
    model_medical.fit(train_x, train_y, epochs=50, batch_size=32, verbose=0)

Building a Docker image

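Next, run a shell script (build_and_push.sh) that builds a Docker image containing the custom loss function and the model code, and pushes the image to Amazon Elastic Container Registry (Amazon ECR). The "image_name" defined at the top of this notebook is the name assigned to the ECR repository that holds this image.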

As mentioned above, we package the model definition and training code in a Docker container, and use Amazon SageMaker's bring-your-own-model training capability to estimate the model parameters, that is, to run the actual training of the binary classifiers.

The following code blocks train the three classifiers.

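First, create and run an Amazon SageMaker training job for the built-in loss function, that is, Keras's binary cross-entropy loss function. Passing loss_function_type set to builtin tells Amazon SageMaker to use Keras's binary cross-entropy loss function. Next, create and run an Amazon SageMaker training job for the custom 5:1 loss function, that is, a custom loss in which a false negative is 5 times as costly as a false positive. Setting loss_function_type to custom, fn_cost to 5, and fp_cost to 1 tells Amazon SageMaker to use the custom loss function with the specified misclassification costs.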

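    # sage is the SageMaker Python SDK (import sagemaker as sage); image, role,
    # bucket, prefix, and sess are assumed to be defined earlier in the notebook.

    # Specify the type of loss function to train the model with. When using the
    # custom loss function, also specify the false negative and false positive costs.
    hyperparameters_five = {
        "loss_function_type": "custom",
        "fn_cost": 5,
        "fp_cost": 1
    }

    model_five = sage.estimator.Estimator(
        image_name=image,
        role=role,
        train_instance_count=1,
        train_instance_type='ml.c4.2xlarge',
        output_path="s3://{}/{}/output".format(bucket, prefix),
        hyperparameters=hyperparameters_five,
        sagemaker_session=sess)

Finally, create and run the medical model in the same way, setting fn_cost to 200 and fp_cost to 1.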
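Inside a bring-your-own training container, Amazon SageMaker makes the hyperparameters available as a JSON file at /opt/ml/input/config/hyperparameters.json, with every value passed as a string. The following sketch shows one way a training script could turn those strings into loss settings. The function name parse_loss_settings and the default values are our assumptions for illustration; the repository's actual training script may handle this differently.

```python
import json


def parse_loss_settings(hyperparameters):
    """Return (loss_function_type, fn_cost, fp_cost) from string-valued hyperparameters."""
    loss_type = hyperparameters.get("loss_function_type", "builtin")
    if loss_type == "builtin":
        # The built-in loss takes no misclassification costs.
        return loss_type, None, None
    if loss_type != "custom":
        raise ValueError("Unknown loss_function_type: {}".format(loss_type))
    # Costs arrive as strings, so convert them before building the loss function.
    fn_cost = float(hyperparameters.get("fn_cost", 1))
    fp_cost = float(hyperparameters.get("fp_cost", 1))
    return loss_type, fn_cost, fp_cost


# Simulate the JSON that a training container would read for the medical model.
raw = json.dumps({"loss_function_type": "custom", "fn_cost": "200", "fp_cost": "1"})
settings = parse_loss_settings(json.loads(raw))
```

The resulting costs could then be passed to custom_loss_wrapper before compiling the model.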

After the models are trained, Amazon SageMaker uploads each trained model artifact to the Amazon S3 bucket specified in the output_path parameter of the training job. We then load the model artifacts from S3, generate predictions with all three models, and compare the results.

Analysis of results

What characteristics do we generally want in a high-performing model?

Keep in mind that the dataset used in this notebook is small (569 instances), and the test set is smaller still (143 instances). For this reason, the exact distribution of model predictions and prediction errors may vary from run to run because of sampling error. Nevertheless, the following general results hold across runs of the models.

First, we will show the conventional evaluation of the model.

By these conventional measures, the two custom loss functions do not perform as well as the built-in loss function (by a small margin).

However, accuracy is not what matters most in judging the quality of these models. In fact, accuracy may be lowest in the "best" model, because we are willing to accept more false positives as long as the number of false negatives is sufficiently reduced.

Looking at the ROC curves and AUC scores of the three models, all of them appear very similar by these measures. However, neither metric shows how the predicted scores are distributed over the interval [0, 1], so we can't tell where the predictions are concentrated.

Note that as we move across the three models, the cost of a false negative increases. This implies that the number of false negatives is likely to decrease with each successive model.

What does this imply for the values in the classification reports? It means that the negative class (benign) should have higher precision, and the positive class (malignant) should have higher recall. (Precision = tp / (tp + fp), recall = tp / (tp + fn).)

Keep in mind that our classifier labels tumors as benign or malignant. According to the costs reported in the medical literature cited earlier, a false negative is far more costly than a false positive. We therefore want to classify all malignant tumors as malignant, and we are not too worried if that increases the number of false positive predictions (up to a point). So it matters more that the negative (benign) class has high precision, and we focus on high recall for the positive (malignant) class.
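The two formulas above can be applied per class, which makes it clearer why false negatives show up in benign precision and malignant recall. This is a minimal sketch; the counts are hypothetical and are not taken from the models in this post.

```python
def precision_recall(tp, fp, fn):
    """Return (precision, recall) for one class from its error counts."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall


# Hypothetical counts, for illustration only.
tn, fp, fn, tp = 90, 10, 2, 48

# Positive (malignant) class: a false negative is a missed malignancy.
malignant_precision, malignant_recall = precision_recall(tp=tp, fp=fp, fn=fn)

# Negative (benign) class: the roles swap, so a "false positive" for this
# class is a malignant tumor that was predicted benign.
benign_precision, benign_recall = precision_recall(tp=tn, fp=fn, fn=fp)
```

Each missed malignancy lowers both malignant recall and benign precision, so reducing false negatives raises exactly the two numbers this section focuses on.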

Built-in loss function

| | Precision | Recall | F1-score | Support |
| --- | --- | --- | --- | --- |
| 0 | 0.95 | 0.99 | 0.97 | 88 |
| 1 | 0.98 | 0.91 | 0.94 | 55 |
| Avg / total | 0.96 | 0.96 | 0.96 | 143 |

5:1 custom loss function

| | Precision | Recall | F1-score | Support |
| --- | --- | --- | --- | --- |
| 0 | 0.96 | 0.93 | 0.95 | 88 |
| 1 | 0.90 | 0.95 | 0.92 | 55 |
| Avg / total | 0.94 | 0.94 | 0.94 | 143 |

Medical custom loss function

| | Precision | Recall | F1-score | Support |
| --- | --- | --- | --- | --- |
| 0 | 0.99 | 0.77 | 0.87 | 88 |
| 1 | 0.73 | 0.98 | 0.84 | 55 |
| Avg / total | 0.89 | 0.85 | 0.86 | 143 |

These classification reports show that we achieved our goal: the medical model has the highest precision for the benign class and the highest recall for the malignant class.

This means that the medical model is the least likely to mistakenly classify a malignant tumor as benign, and the most likely to identify all malignant tumors as malignant.

Looking at these reports, you can see that despite having the lowest F1 score and the lowest average precision and recall, the medical model is the best of the three for our purposes.

A better tool for understanding the errors is the confusion matrix.

Our goal is to reduce the number of false negatives, so the best model is the one with the fewest false negatives, provided the increase in false positives is not excessive.

As we move across these three confusion matrices, the cost of a false negative increases relative to a false positive. We expect the number of false negatives to decrease and the number of false positives to increase. However, because the models are trained to weight observations differently than before, the numbers of true positives and true negatives may also shift.

Built-in loss function

| | Predicted negative | Predicted positive |
| --- | --- | --- |
| Actual negative | 85 | 3 |
| Actual positive | 4 | 51 |

5:1 custom loss function

| | Predicted negative | Predicted positive |
| --- | --- | --- |
| Actual negative | 83 | 5 |
| Actual positive | 3 | 52 |

Medical custom loss function

| | Predicted negative | Predicted positive |
| --- | --- | --- |
| Actual negative | 66 | 22 |
| Actual positive | 1 | 54 |

These results show that by modifying the values in the loss function provided when training the model, we can shift the balance between the categories of error. Using different weightings of relative cost has a significant effect on the errors, and some of the other predictions move as well. An interesting direction for future research is to explore the features that the model identifies to support these differences in predictions.

This provides a powerful lever for influencing the model based on the moral, ethical, and economic implications of decisions about the relative weights of the different errors.

A specific observation is classified as positive or negative by comparing its score with a threshold. Intuitively, the farther the score is from the chosen threshold, the higher the probability that the prediction is correct (assuming the threshold is well chosen).

When comparing model predictions against the threshold used to divide the classes, some values may be very close to the threshold. In the extreme, the difference between a "true" and a "false" could be smaller than the error between two different readings of an input sensor or measurement, or even smaller than the rounding error of a floating-point library. In the worst case, most of the observation scores might cluster very close to the threshold. These "close calls" are not visible in the confusion matrix, nor in the F1 score or ROC curve discussed earlier.
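One simple way to quantify such close calls is to count the scores that fall within a small margin of the threshold. The sketch below is illustrative only: the scores are hypothetical, and the margin of 0.05 is an arbitrary choice, not a value used in the notebook.

```python
def classify(scores, threshold=0.5):
    """Label a score positive (1) when it reaches the threshold, else negative (0)."""
    return [1 if s >= threshold else 0 for s in scores]


def count_near_threshold(scores, threshold=0.5, margin=0.05):
    """Count scores whose distance from the threshold is at most `margin`."""
    return sum(1 for s in scores if abs(s - threshold) <= margin)


# Hypothetical scores; 0.499999 is classified negative by the narrowest of margins.
scores = [0.02, 0.499999, 0.51, 0.97, 0.46]
labels = classify(scores)
close_calls = count_near_threshold(scores)
```

Three of these five scores sit within 0.05 of the threshold, even though the resulting labels look just as definite as those for 0.02 and 0.97.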

Intuitively, it is desirable for most scores to lie far from the threshold or, conversely, for the threshold to be chosen based on gaps in the distribution of scores. (For example, in cartography, Jenks natural breaks are frequently used to address the same problem.) The following graphs are a tool for examining the relationship between the scores and the threshold.

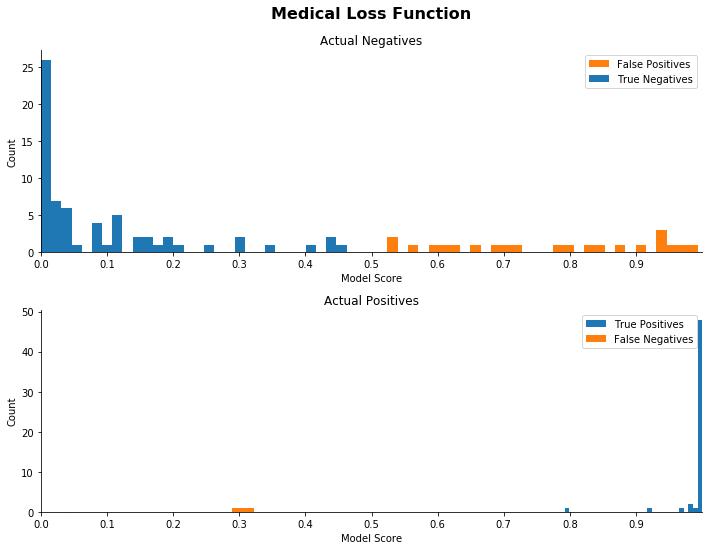

Each set of distribution plots shows the actual score of each sample in a confusion matrix. In each set, the top histogram plots the distribution of predicted scores for all actual negatives, that is, benign tumors (the top row of the confusion matrix). The bottom histogram plots the predicted scores of the actual positives (the bottom row).

In each plot, correctly classified observations are shown in blue and incorrectly classified observations in orange. The threshold of 0.5 used in other functions in this notebook is used to color the plots. However, the choice of threshold does not affect the actual scores or the shape or level of the plots, only the coloring. You could choose another threshold, and the results in this section would still hold.

In these plots, a "good" distribution is one in which the scores are grouped around the endpoints, 0 and 1. More specifically, a "good" set of histograms has almost no false negatives, has the actual positives concentrated near 1, and has almost no orange points. We would like to see the actual negatives concentrated near 0, but for this use case we are willing to accept a spread of predictions for the actual negatives, as long as the actual positive predictions are concentrated near 1 and the number of false negatives is small.

From these plots, you can see that increasing the ratio shifts the distribution. As the ratio of the error costs increases, the model pushes many samples toward the extremes, becoming much more discriminating.

You can also see that there are almost no scores close to the cutoff among the actual positives. The model shows increased "certainty" in its positive classifications.

Here, we calculate the expected value (economic value) of each of the three classification models. The expected value is the probability-weighted loss, in US dollars, that an individual patient is expected to incur when given a particular diagnostic test. The diagnostic test with the highest expected value is considered "best" under this metric.

For an explanation of the QALY and dollar values associated with each test outcome, see the discussion of screening costs earlier in this blog post.

This calculation reflects the value of all four possible test outcomes: true negatives, true positives, false negatives, and false positives.

| | Expected value |
| --- | --- |
| Built-in | -$19,658.34 |
| 5:1 | -$18,160.83 |
| Medical | -$15,282.86 |
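The expected values above can be reproduced from figures already given in this post. The sketch below assumes that the expected value is the average dollar value per patient in the test set, computed from each model's confusion matrix and the QALY values stated earlier (using -0.0128767 QALY for a false positive). Under that assumption, the results agree with the table to the cent, but the notebook's own calculation may be organized differently.

```python
QALY_DOLLAR_VALUE = 100_000  # USD per QALY, as stated earlier in the post

# Dollar value of each outcome relative to the true-negative baseline.
VALUE = {
    "tn": 0.0,
    "fp": -0.0128767 * QALY_DOLLAR_VALUE,
    "tp": -0.3528 * QALY_DOLLAR_VALUE,
    "fn": -2.52 * QALY_DOLLAR_VALUE,
}


def expected_value(tn, fp, fn, tp):
    """Average dollar value per patient implied by a confusion matrix."""
    counts = {"tn": tn, "fp": fp, "fn": fn, "tp": tp}
    total = sum(counts.values())
    return sum(counts[k] * VALUE[k] for k in counts) / total


# Confusion-matrix counts reported above for each model.
builtin = expected_value(tn=85, fp=3, fn=4, tp=51)
five_to_one = expected_value(tn=83, fp=5, fn=3, tp=52)
medical = expected_value(tn=66, fp=22, fn=1, tp=54)
```

Because a false negative costs 252,000 USD and a false positive only about 1,288 USD, the medical model's 22 false positives cost far less in total than the three false negatives it avoids relative to the built-in model.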

The binary classifier trained with the custom loss function based on the costs reported in the medical literature has the lowest expected cost.

Note that we used the same QALY values both to train the model and to evaluate its economic cost, but you don't need to use the same values. This is a second powerful way to influence, understand, and evaluate a model.

Now that we have demonstrated how to train classifiers with a custom loss function and shown the results, try various relative values for the FN and FP costs and see the effects.

Summary

The example in this blog post shows how to use a custom loss function to change the balance between FN and FP errors. We showed that this balance can be influenced separately from the costs of the different types of treatment plan applied to each set of predictions.

There are several ways to extend this work. The following are promising avenues:

Here, we showed an approach that applies to binary classification problems, but it can be extended to multi-class classification problems. It is more difficult to design an input cost matrix that accurately (or adequately) reflects the costs of the various types of errors and misclassifications. For example, misidentifying a stop sign as a 45 mph speed limit sign probably does not have the same cost or consequence as the reverse error. However, a model trained with this understanding may have higher overall economic value than one trained simply to maximize accuracy, recall, F1 score, or AUC.
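As a rough illustration of what a multi-class cost matrix might look like, the sketch below uses a nested dictionary indexed by actual and predicted class. The class names and every cost value are hypothetical, chosen only to show that the matrix need not be symmetric.

```python
# Hypothetical costs, for illustration only: COST[actual][predicted].
COST = {
    "stop": {"stop": 0.0, "speed_45": 100.0, "speed_35": 80.0},
    "speed_45": {"stop": 10.0, "speed_45": 0.0, "speed_35": 1.0},
    "speed_35": {"stop": 10.0, "speed_45": 2.0, "speed_35": 0.0},
}


def total_cost(actual, predicted):
    """Sum the cost of each (actual, predicted) pair; correct predictions cost 0."""
    return sum(COST[a][p] for a, p in zip(actual, predicted))


actual = ["stop", "speed_45", "speed_35", "stop"]
predicted = ["speed_45", "stop", "speed_35", "stop"]
cost = total_cost(actual, predicted)
```

A matrix like this could be used both to evaluate a trained model and, with a suitable loss function, to weight errors during training.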

This blog post shows the effect of using a custom loss function to express the true impact of different types of error. A custom loss function lets you choose the relative balance of the types of error the model makes and evaluate the economic impact of changing that balance. By visualizing the distribution of the resulting scores, you can evaluate the model's ability to discriminate between classes. You can also evaluate the costs and trade-offs of different prediction errors and of different approaches to handling those errors. This combination provides a powerful new tool for linking machine learning to business outcomes and making the trade-offs more transparent.

References

Veronika Megler, PhD, is a senior consultant for AWS Professional Services. She applies innovative big data, AI, and ML technologies to help customers solve new problems, and to solve problems more efficiently and effectively.

Scott Gregoire is a data scientist for AWS Professional Services. He holds a PhD from the University of Texas at Austin and has advised clients in fields ranging from international finance to retail. He currently works with customers to develop innovative machine learning solutions on AWS.
