Text classification with Gluon on Amazon SageMaker and AWS Batch

Our customer had a problem: manually categorizing claims was a bottleneck. These claims are based on a text field that briefly describes the details of the event. Here is an example of such text: "During the recent unseasonable weather in Arizona, a plutonium-fueled reactor overheated on a hot day. The heat damage reached the flux capacitor, which cannot be restored without replacement." If you classified this claim, it would fall under "fire" or something similar.

Claims staff typically read through mountains of warranty claims, often thousands of them, and manually verify that the submitter classified each complaint correctly. Each claim must be assigned to the appropriate warranty category.

The company wanted to speed up this pipeline to reduce the time it takes to resolve a claim as much as possible, a central requirement for maintaining customer satisfaction. Because the company had a large, broad database of past warranty claims, it was logical to look at supervised learning.

In this post, we describe a text classification solution that helps speed up customer workflows by bridging the gap between a claims team that is not strongly technical and a more solution-focused approach. We outline the solution using sentiment analysis as the example. In this example scenario, we train our classification model with MXNet's Gluon and Amazon SageMaker, and then build an application based on AWS Lambda that uses the elastic resources of AWS Batch to process large batches of text.

Before you start: you will get the most out of this post if you are familiar with Python, the AWS Command Line Interface (CLI), and the AWS SDK for Python (Boto3).

For this demo, I use a subset of the IMDB Large Movie Review Dataset, which is already split into test and training sets. The following is an example of a comma-separated row of text with its sentiment classification.

Sentiment: 1, Text: "Have you ever played a sport, given it your all, and had a brief chance to play a key role and become the hero or the champion... only to fail in the end? Many of us have experienced such a moment in life. This is the premise of the movie "The Best of Times." In this story, middle-aged bank clerk Jack Dundee (Robin Williams), who has been deeply depressed for years over a mistake he made in a football game long ago, decides to play the game again. To do so, he has to persuade Reno Hightower (Kurt Russell), once a great football quarterback, to come back. Reno leads a hard life; he doesn't want to give up, and he is reluctant to revisit the past glory the years have made him forget. Both men are facing the fact that the years have passed, and their marriages are stuck in the mud, so they need to do something. It isn't easy, because Jack's father-in-law (Donald Moffat) keeps reminding him of his mistake. Don't give up, and try to undo the biggest mistake of your life. It's great entertainment for anyone who wants to remember their youth."

The data files for this demo are test.csv and train.csv. Note: The dataset may be included in the GitHub repository or provided in a public Amazon S3 bucket; use whichever works for you.
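To make the data format concrete, here is a minimal sketch that reads label-and-text rows with Python's standard csv module. It assumes each row has two columns, the sentiment label (0 or 1) followed by the review text, and no header row; check the actual layout of train.csv and test.csv before relying on it. The function name read_labeled_reviews is hypothetical.

```python
import csv
import io
from typing import Iterable, List, Tuple


def read_labeled_reviews(lines: Iterable[str]) -> List[Tuple[int, str]]:
    """Parse (label, text) rows; assumes label first, text second, no header row."""
    rows = []
    # csv.reader handles quoted text fields that themselves contain commas
    for record in csv.reader(lines):
        if not record:
            continue  # skip blank lines
        # Re-join any extra columns in case the text was not quoted
        label, text = record[0], ",".join(record[1:])
        rows.append((int(label), text))
    return rows


sample = io.StringIO('1,"A great movie, full of heart."\n0,"Dull and far too long."\n')
print(read_labeled_reviews(sample))
```

With a real file, pass an open handle instead, for example `open("data/test.csv", newline="")`.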

First, create all of the static pieces of the architecture: the security policies, Amazon S3 buckets, compute environment and job queue, AWS Lambda function, and AWS Lambda trigger. Then package the training and prediction code in a Docker container and upload it to Amazon Elastic Container Registry (Amazon ECR). Once you have a working container, you can use Amazon SageMaker to train the classification model quickly.

Uploading data to the predict bucket triggers the Predict Job Lambda function, which submits an AWS Batch job for the data that was just uploaded to Amazon S3. The job uses the trained parameters from the Amazon SageMaker training job to make predictions on the event-source data.

Make sure you have a Bash-compatible machine with Git, Docker, and the AWS CLI installed.

If you haven't installed the SageMaker Python SDK yet, install it with the following command:

    pip install sagemaker

Then download and unzip the code for this post:

    wget https://s3.amazonaws.com/aws-ml-blog/artifacts/text-classification-gluon/gluon-sagemaker-batch-blog.zip && unzip gluon-sagemaker-batch-blog.zip

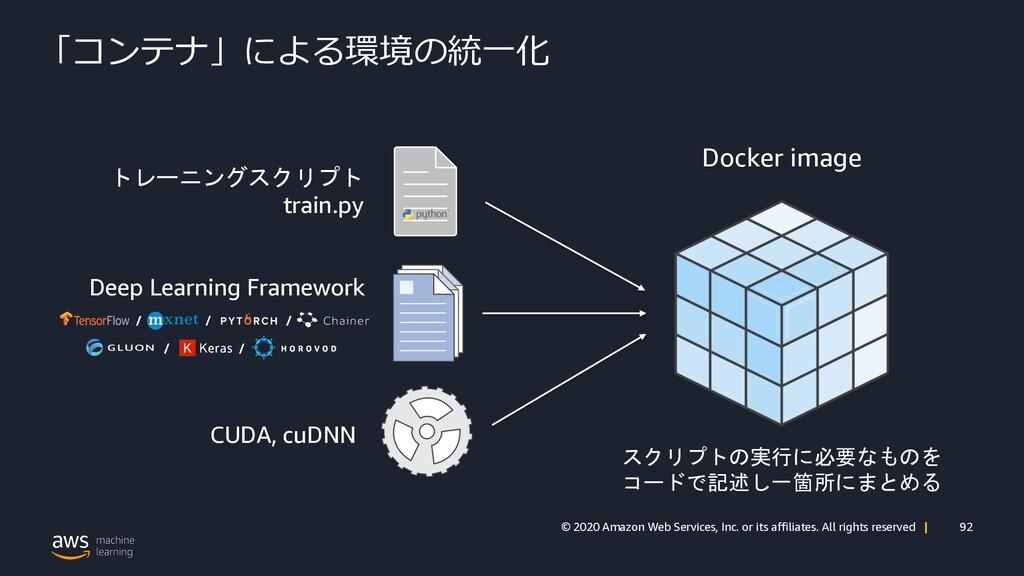

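Because we have a custom tie-in to AWS Batch, we build a custom Amazon SageMaker image instead of using a prebuilt one. As in our previous post, Deep Learning on AWS Batch, we use Docker to create an image that both Amazon SageMaker and our compute environment use. Our Dockerfile builds on the mxnet/python:gpu Docker repository and adds several packages. The important line to note is the second RUN command: it uses the spacy package to download pretrained word embeddings, which speeds up training. To manage the training and prediction jobs, we add three scripts: train (the Python executable that SageMaker runs), predict.py, and utils.py. utils.py manages the various file transfers, defines the language model in Gluon, and creates the structures for encoding incoming text. The train file processes the training data, trains the language model on it, and uploads the model parameters and the text encoder to Amazon S3. The predict.py script downloads the previously created parameters, loads the language model weights, and makes predictions on the file being analyzed (the test.csv file).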

Dockerfile:

    FROM mxnet/python:gpu
    RUN pip2 install numpy scipy sklearn pandas awscli boto3 spacy -U
    RUN python2 -m spacy download en_core_web_md
    # add these files for batch prediction
    ADD mxnet_model/utils.py /usr/local/bin/utils.py
    ADD mxnet_model/predict.py /usr/local/bin/predict.py
    ADD mxnet_model/train /usr/local/bin/train.py
    ENV PYTHONUNBUFFERED=TRUE
    ENV PYTHONDONTWRITEBYTECODE=TRUE
    ENV PATH="/opt/program:${PATH}"
    COPY mxnet_model /opt/program
    WORKDIR /opt/program
    USER root

In case our train file is not already executable (it should be), run the following command as a precaution:

    chmod +x train

To push the Docker image to Amazon ECR, run the following command:

    cd container && bash build_and_push.sh languagemodel && cd ..

You will need the Amazon ECR repository URI of the image you just created. Paste the URI into a text editor so that you don't forget it. (If you do forget it, you can find it under [Repositories] in the Amazon ECS console.) It has the format {Account-ID}.dkr.ecr.{Region}.amazonaws.com/languagemodel.
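If you would rather reconstruct the URI than copy it, the format above can be assembled from your account ID and Region. This is a small sketch; the function name ecr_repository_uri is hypothetical, and the host shown is the standard one for commercial AWS Regions (other partitions, such as China Regions, use a different domain).

```python
def ecr_repository_uri(account_id: str, region: str, repository: str = "languagemodel") -> str:
    """Build an Amazon ECR repository URI of the form {Account-ID}.dkr.ecr.{Region}.amazonaws.com/{repo}."""
    # The registry host is per account and per Region; the repository name follows the slash
    return f"{account_id}.dkr.ecr.{region}.amazonaws.com/{repository}"


print(ecr_repository_uri("123456789012", "us-east-1"))
```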

In this step, an AWS Lambda trigger is assigned to a specific Amazon S3 bucket. When you put a file in the bucket, the trigger invokes a specific Lambda function. In our example, when data is put into the Amazon S3 bucket, the trigger invokes the Lambda function, which submits a job, and that job makes predictions on the data.
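The flow above (S3 event, then Lambda, then AWS Batch job) can be sketched as a Lambda handler that reads the bucket and key from each S3 event record and calls Boto3's `batch.submit_job`. This is not the repository's lambda_function.py: the job queue and job definition defaults, the JOB_QUEUE and JOB_DEFINITION environment variables, and the INPUT_BUCKET and INPUT_KEY variables passed to the container are all hypothetical names for illustration.

```python
import os
import re
from urllib.parse import unquote_plus


def build_submit_job_args(record: dict, job_queue: str, job_definition: str) -> dict:
    """Turn one S3 event record into keyword arguments for batch.submit_job."""
    bucket = record["s3"]["bucket"]["name"]
    # S3 URL-encodes object keys in event notifications (spaces arrive as '+')
    key = unquote_plus(record["s3"]["object"]["key"])
    # Batch job names allow letters, digits, hyphens, and underscores, up to 128 chars
    job_name = re.sub(r"[^A-Za-z0-9_-]", "-", f"predict-{key}")[:128]
    return {
        "jobName": job_name,
        "jobQueue": job_queue,
        "jobDefinition": job_definition,
        "containerOverrides": {
            "environment": [
                # Hypothetical variable names that a prediction container could read
                {"name": "INPUT_BUCKET", "value": bucket},
                {"name": "INPUT_KEY", "value": key},
            ]
        },
    }


def lambda_handler(event, context):
    import boto3  # included in the AWS Lambda Python runtime

    batch = boto3.client("batch")
    job_ids = []
    for record in event.get("Records", []):
        args = build_submit_job_args(
            record,
            job_queue=os.environ.get("JOB_QUEUE", "mxnet-batch-job-queue"),  # hypothetical
            job_definition=os.environ.get("JOB_DEFINITION", "mxnet-predict-job"),  # hypothetical
        )
        job_ids.append(batch.submit_job(**args)["jobId"])
    return {"submitted": job_ids}
```

Keeping the event parsing in a pure function makes it testable without AWS credentials; only `lambda_handler` talks to AWS Batch.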

Before you create an Amazon S3 bucket and assign a trigger, you need to upload the Lambda function's code to an Amazon S3 bucket of your choice, so you need to pick a name for the Lambda bucket. Here, I use "mxnet-lambda". (You will need this name later, so copy it and keep it next to the repository URI you saved earlier.) The Lambda function is built from a ZIP file, which also needs a storage location, such as an S3 bucket. Uploading the ZIP file to Amazon S3 first avoids dependency issues in the AWS CloudFormation stack.

To do this, run the following commands:

    cd lambda-function && zip lambda_function.zip lambda_function.py
    # mxnet-lambda is the name of the bucket we will create
    # mxnet_batch_lambda/lambda_function.zip is the name of the key
    # 1. create bucket with a unique name - I used mxnet-lambda
    #    (outside us-east-1, also pass --create-bucket-configuration LocationConstraint=<region>)
    aws s3api create-bucket --bucket mxnet-lambda --region us-east-1
    # 2. copy lambda_function.zip to our new bucket
    aws s3 cp lambda_function.zip s3://mxnet-lambda/mxnet_batch_lambda/lambda_function.zip
    cd .. && cd ..

Because a similar dependency problem can occur, the actual trigger is not created until step 5.

In this step, we build an Amazon Virtual Private Cloud (Amazon VPC), create the necessary Amazon S3 buckets, assign the appropriate AWS Identity and Access Management (IAM) roles to the AWS Cloud resources, and finally build the AWS Batch compute environment. Before starting this step, have the following information ready: a unique prefix for your S3 bucket names, the Lambda code bucket name from step 3, and the repository URI from step 2.

With this information, you can use AWS CloudFormation and the AWS CLI to create the architecture programmatically.

    cd cloudformation-templates && aws cloudformation create-stack \
        --stack-name mxnet-batch \
        --template-body file://create.yaml \
        --parameters ParameterKey=s3uniqueprefix,ParameterValue=MY_UNIQUE_NAME_HERE \
                     ParameterKey=LambdaCodeBucket,ParameterValue=LAMBDA_NAME_FROM_STEP_3 \
                     ParameterKey=IMAGE,ParameterValue=YOUR_REPOSITORY_URI_HERE_FROM_STEP_2 \
                     ParameterKey=EnvironmentTag,ParameterValue=Development \
        --capabilities CAPABILITY_NAMED_IAM

Go to the CloudFormation console in the AWS Management Console and check that the stack named mxnet-batch completes successfully. When it has completed (10 to 20 minutes), you need to create a job queue. (Note: A job queue can be created only after the compute environment has been created and validated.) Make sure the current folder is cloudformation-templates, and run the following command:

    aws cloudformation create-stack --stack-name mxnet-batch-jobqueue --template-body file://jobqueue.yaml --capabilities CAPABILITY_NAMED_IAM

All you need to create the AWS Lambda trigger is the unique bucket prefix you selected in step 4.

    cd lambda_triggers && bash launch_triggers.sh {MY_UNIQUE_PREFIX} && cd ..

This script ensures that the Lambda function is invoked whenever a new object is placed in the predict bucket.
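For reference, an S3-to-Lambda trigger of this kind is typically expressed as a bucket notification configuration. The sketch below builds one in the shape Boto3's `put_bucket_notification_configuration` expects. It is an assumption about what launch_triggers.sh sets up, not a copy of it; the function name lambda_trigger_config and the ".csv" suffix filter are hypothetical choices.

```python
def lambda_trigger_config(lambda_arn: str, suffix: str = ".csv") -> dict:
    """NotificationConfiguration that invokes a Lambda function when new objects arrive."""
    return {
        "LambdaFunctionConfigurations": [
            {
                "LambdaFunctionArn": lambda_arn,
                # Fire for every way an object can be created (PUT, POST, copy, multipart upload)
                "Events": ["s3:ObjectCreated:*"],
                # Only react to keys that end with the given suffix
                "Filter": {"Key": {"FilterRules": [{"Name": "suffix", "Value": suffix}]}},
            }
        ]
    }


print(lambda_trigger_config("arn:aws:lambda:us-east-1:123456789012:function:example"))
```

To apply it, you would call `boto3.client("s3").put_bucket_notification_configuration(Bucket=..., NotificationConfiguration=...)` with your predict bucket's name. S3 also needs permission to invoke the function (granted with Lambda's `add_permission`), otherwise the configuration call is rejected.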

To train the language model defined in the training script properly, you must provide the training configuration the model requires. This configuration file (in the config folder) contains the number of training epochs along with architecture- and optimization-specific settings. The file is uploaded to the "my-unique-model-config" bucket defined in the CloudFormation template.

The following are the training settings I used. You can try different parameters if you like. The only required change is to update the MODELPARAMSURL, TRANSFORMERURL, and MODELCONFIGURL parameters to match the unique prefix you selected in step 4.

    {
      "n_classes": 2,
      "num_epoch": 5,
      "optimizer": "adam",
      "seq_len": 500,
      "vocab_size": 5000,
      "batch_size": 64,
      "hidden_size": 500,
      "embed_size": 300,
      "dropout": 0.2,
      "n_layers": 1,
      "bidirectional": true,
      "train_log_interval": 10,
      "val_log_interval": 5,
      "learning_rate": 0.001,
      "use_spacy_pretrained": true,
      "save_name": "model.params",
      "MODELPARAMSURL": "batch-mxnet-demo-model-paramameters",
      "TRANSFORMERURL": "batch-mxnet-demo-text-transformer",
      "MODELCONFIGURL": "batch-mxnet-demo-config-bucket",
      "epoch_factor": 4,
      "power_decay": true,
      "cnn": true,
      "wd": 0.0002,
      "kernel_size": 8
    }

The settings are described below.

n_classes is the total number of classes the language model predicts. The IMDB dataset has only two classes: positive reviews and negative reviews.

num_epoch is the total number of epochs the language model is trained for. With one epoch, the model sees the entire training set only once.

optimizer is the learning algorithm to use. For the available options, see http://mxnet.incubator.apache.org/api/python/optimization/optimization.html.

seq_len is the sequence length: the maximum number of words in a given sentence. Sentences shorter than this are padded with 0, and longer sentences are truncated. This is used to encode both the training data and the test data. Note: This parameter cannot be changed after training, because the model is built specifically for this value.
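The padding and truncation rule can be sketched in a few lines. This assumes padding and truncation both happen at the end of the sequence and that 0 is the padding id; the actual encoder in utils.py may pad at the front instead. The function name pad_or_truncate is hypothetical.

```python
from typing import List


def pad_or_truncate(token_ids: List[int], seq_len: int, pad_id: int = 0) -> List[int]:
    """Force a sequence of token ids to exactly seq_len entries."""
    if len(token_ids) >= seq_len:
        return token_ids[:seq_len]  # too long: cut off the tail
    return token_ids + [pad_id] * (seq_len - len(token_ids))  # too short: pad with 0


print(pad_or_truncate([5, 9, 2], 5))           # [5, 9, 2, 0, 0]
print(pad_or_truncate([5, 9, 2, 7, 3, 8], 5))  # [5, 9, 2, 7, 3]
```

Because every encoded sentence ends up with exactly seq_len entries, the model's input shape is fixed, which is why seq_len cannot change after training.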

vocab_size specifies how many of the most frequently used words, by rank, the model learns. Rarely used words that fall below that rank are simply ignored. The idea is to restrict the vocabulary to the top n words and nothing else. Reducing this parameter makes the model faster at the cost of accuracy, but if it is too small, the results become meaningless.
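A minimal sketch of a top-n vocabulary, assuming simple lowercase whitespace tokenization (the real encoder uses its own tokenizer). Ids start at 1 so that 0 stays free for padding; build_vocab and encode are hypothetical names.

```python
from collections import Counter
from typing import Dict, List


def build_vocab(texts: List[str], vocab_size: int) -> Dict[str, int]:
    """Map the vocab_size most frequent words to ids 1..vocab_size (0 is reserved for padding)."""
    counts = Counter(word for text in texts for word in text.lower().split())
    return {word: i + 1 for i, (word, _) in enumerate(counts.most_common(vocab_size))}


def encode(text: str, vocab: Dict[str, int]) -> List[int]:
    """Encode a text, silently dropping words outside the vocabulary."""
    return [vocab[w] for w in text.lower().split() if w in vocab]


vocab = build_vocab(["the movie was great", "the plot was thin", "the end"], vocab_size=2)
print(vocab)                                  # {'the': 1, 'was': 2}
print(encode("The acting was great", vocab))  # [1, 2]
```

The example shows the trade-off described above: with vocab_size=2, "acting" and "great" are dropped, so the encoding is cheap but carries little sentiment information.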

batch_size controls how many sentences are processed together in each training step. Tuning this parameter is an optimization question that is beyond the scope of this demo. If you want to get into the weeds, see the discussion at https://www.quora.com/Intuitively-how-does-mini-batch-size-affect-the-performance-of-stochastic-gradient-descent.

cnn controls whether the model is a convolutional network-based language model or an LSTM-based language model.

use_spacy_pretrained determines whether to initialize the network with spaCy's pretrained word embeddings.

embed_size sets the embedding size to use if you do not use spaCy's pretrained word embeddings. The default is 300 (the spaCy embedding size).

hidden_size controls the size of the LSTM layers (when the LSTM option is selected). The size chosen here is specific to this problem (sentiment analysis on the IMDB set); you should explore this value for your own dataset.

dropout is a regularization parameter that helps keep the model from overfitting. Recommended values are 0.1 to 0.5 (note that it cannot exceed 1.0).

n_layers controls the number of recurrent layers in the LSTM (when the LSTM option is selected). Like hidden_size, it depends on the dataset.

bidirectional is an interesting parameter. It controls whether the recurrence in the LSTM runs over the sequence only in order or also in the reverse direction. It is currently set to true.

train_log_interval controls how often the loss and perplexity of the current training batch are displayed: they are printed every n batches, and this parameter sets n.

val_log_interval controls how often the loss, perplexity, and validation-set accuracy are displayed: they are printed every n batches, and this parameter sets n.

learning_rate controls the learning rate of the optimization algorithm; the rate is reduced over time. The learning rate also depends on the dataset, so you should experiment with it on your own data.

save_name determines the file name used when saving the model weights to S3.

MODELPARAMSURL is the S3 bucket that stores the model weights.

TRANSFORMERURL is the S3 bucket that stores the text transformer. The text transformation is important because it restricts incoming text to the vocabulary of the training dataset.

MODELCONFIGURL is the S3 bucket from which the prediction task pulls the configuration file.
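The three bucket parameters above must match the unique prefix you chose in step 4. The sketch below rewrites them in a copy of the config, assuming the bucket names follow the "<prefix>-<suffix>" pattern visible in the sample config.json (where the prefix is batch-mxnet-demo). Verify the actual bucket names against the CloudFormation template before uploading; the function name with_prefix is hypothetical.

```python
import json

# Bucket-name suffixes as they appear in the sample config.json (spelling kept as-is)
BUCKET_KEYS = {
    "MODELPARAMSURL": "model-paramameters",
    "TRANSFORMERURL": "text-transformer",
    "MODELCONFIGURL": "config-bucket",
}


def with_prefix(config: dict, prefix: str) -> dict:
    """Return a copy of the training config whose bucket names use the given prefix."""
    updated = dict(config)  # leave the caller's dict untouched
    for key, suffix in BUCKET_KEYS.items():
        updated[key] = f"{prefix}-{suffix}"
    return updated


sample = {"num_epoch": 5, "MODELCONFIGURL": "batch-mxnet-demo-config-bucket"}
print(json.dumps(with_prefix(sample, "my-prefix"), indent=2))
```

In practice you would load config/config.json with `json.load`, apply `with_prefix`, and write the result back before uploading it.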

epoch_factor is the epoch at which the learning rate starts to decay.

power_decay determines whether the learning rate is decayed with the function below. The function multiplies the current learning rate by 0.1 raised to floor(epoch / epoch_factor), so once the decay starts, each update cuts the current rate by at least 90%.

    import math

    # config is the parsed config.json; trainer is the Gluon Trainer
    decay = math.floor(epoch / config['epoch_factor'])
    trainer.set_learning_rate(trainer.learning_rate * math.pow(0.1, decay))

wd is the weight decay (the L2 regularization coefficient); in this example it is set to 0.0002. It modifies the objective function by adding a penalty for large weights.
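To see what the decay snippet in the power_decay description does over a run, the sketch below replays it against a stand-in trainer. It assumes the snippet runs once at the start of every epoch; FakeTrainer is a hypothetical substitute that exposes only the `learning_rate` property and `set_learning_rate` method that the snippet uses on Gluon's Trainer.

```python
import math


class FakeTrainer:
    """Stand-in for gluon.Trainer exposing only learning_rate / set_learning_rate."""

    def __init__(self, learning_rate: float):
        self.learning_rate = learning_rate

    def set_learning_rate(self, lr: float) -> None:
        self.learning_rate = lr


def simulate_power_decay(learning_rate: float, epoch_factor: int, num_epoch: int):
    """Apply the decay snippet once per epoch and record the rate used in each epoch."""
    trainer = FakeTrainer(learning_rate)
    history = []
    for epoch in range(num_epoch):
        decay = math.floor(epoch / epoch_factor)
        trainer.set_learning_rate(trainer.learning_rate * math.pow(0.1, decay))
        history.append(trainer.learning_rate)
    return history


print(simulate_power_decay(0.001, epoch_factor=4, num_epoch=6))
```

Because the snippet multiplies the *current* rate, the reductions compound under this assumption: the rate stays at 0.001 for epochs 0 to 3, drops to about 1e-4 in epoch 4, and to about 1e-5 in epoch 5. With the sample config (num_epoch 5, epoch_factor 4), only the last epoch runs at the reduced rate.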

kernel_size controls the size of the convolution window when the CNN option is selected.

Let's upload the configuration file to the Amazon S3 config bucket.

    aws s3 cp config/config.json s3://MY-UNIQUE-PREFIX-FROM-STEP-4-config-bucket/config.json

There are two ways to start model training: a simple Python script or a Jupyter notebook. To use the Python script, run the following command from the root directory of the code:

    python train_sagemaker_model.py

You should see output similar to the following.

To use the Jupyter notebook option instead, run the following command from the code root directory to launch the Jupyter Notebook app:

    jupyter notebook

In the Notebook Dashboard, find and click the file named train_sagemaker_model.ipynb. From there, you can run the notebook one cell at a time by pressing Shift+Enter, or choose [Cell] in the menu bar and select [Run All] to execute the entire notebook.

When training is complete and the best validation accuracy is acceptable, the model is ready to use with the batch processor.

Now you can upload new content to the predict bucket and let AWS Batch process the data. To trigger the Lambda function, upload test.csv to the predict bucket with the following command:

    aws s3 cp data/test.csv s3://MY-UNIQUE-PREFIX-FROM-STEP-4-predict-bucket/test.csv

You can log in to the AWS Batch console to check whether the job's status is RUNNING or SUCCEEDED. To follow its progress, you can also click the link in the Batch console to view the job's stdout/stderr output in Amazon CloudWatch Logs.
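If you prefer to check status from code rather than the console, Boto3's `batch.describe_jobs(jobs=[...])` returns a dictionary whose "jobs" list carries a "status" for each job (SUBMITTED, PENDING, RUNNABLE, STARTING, RUNNING, SUCCEEDED, or FAILED). The helpers below summarize such a response; summarize_jobs and all_finished are hypothetical names, and the sample response is trimmed to the fields the helpers read.

```python
from collections import Counter


def summarize_jobs(describe_jobs_response: dict) -> dict:
    """Count AWS Batch jobs by status from a batch.describe_jobs() response."""
    return dict(Counter(job["status"] for job in describe_jobs_response.get("jobs", [])))


def all_finished(describe_jobs_response: dict) -> bool:
    """True once every job has reached a terminal state (SUCCEEDED or FAILED)."""
    jobs = describe_jobs_response.get("jobs", [])
    return bool(jobs) and all(job["status"] in ("SUCCEEDED", "FAILED") for job in jobs)


# A trimmed-down response of the shape boto3 returns; real responses carry more fields
response = {"jobs": [{"jobId": "a1", "status": "RUNNING"}, {"jobId": "b2", "status": "SUCCEEDED"}]}
print(summarize_jobs(response))  # {'RUNNING': 1, 'SUCCEEDED': 1}
print(all_finished(response))    # False
```

Against a live account, you would pass the result of `boto3.client("batch").describe_jobs(jobs=[job_id])`, using the job ID shown in the Batch console.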

When the job succeeds, download the predictions:

    aws s3 cp s3://MY-UNIQUE-PREFIX-FROM-STEP-4-batch-results/test_output.csv test_output.csv

As this demo shows, building on AWS services lets engineers develop, test, and deploy code at scale while AWS manages the operational overhead. When Amazon SageMaker manages the training process instead of you, data scientists are free to start building and shaping highly complex systems for your company. Going further and combining Amazon SageMaker with the large-scale batch processing of AWS Batch can help eliminate bottlenecks in any workflow.

Matt Krzus is a data scientist with AWS Professional Services. He has a background in machine learning and computer vision on low-power edge devices. He currently works with the IoT and analytics teams in Professional Services.
