Simplify machine learning with XGBoost and Amazon SageMaker

Machine learning is a powerful tool that enables previously impossible use cases, such as computer vision, self-driving cars, and natural language processing. It is a promising technology, but it can be complicated to implement in practice. In this blog post, we discuss XGBoost, a simple yet powerful machine learning library that can be applied to a variety of use cases. We also walk through a step-by-step tutorial that runs XGBoost on a sample dataset in Amazon SageMaker and shows how to build a model that predicts the likelihood of credit card default.

What is XGBoost?

XGBoost (eXtreme Gradient Boosting) is a popular and efficient open source implementation of the gradient boosted trees algorithm. Gradient boosting is a machine learning algorithm that tries to accurately predict a target variable by combining the estimates of a set of simpler, weaker models. By applying gradient boosting to decision tree models in a highly scalable way, XGBoost performs very well in machine learning competitions. It also robustly handles a variety of data types, relationships, and distributions. It provides many hyperparameters, which are variables that you can tune to improve model performance. This flexibility lets XGBoost solve a wide range of machine learning problems.
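To make the idea of combining weak models concrete, here is a minimal sketch of gradient boosting for squared-error regression on one-dimensional data. It is not XGBoost's implementation: the helper names (`fit_stump`, `gradient_boost`), the toy data, and the choice of one-split trees are all assumptions made for illustration, and XGBoost adds regularization and many optimizations on top of this basic loop.

```python
# Illustrative gradient boosting with one-split trees ("stumps").
# Assumes at least two distinct x values; not XGBoost's actual algorithm.

def fit_stump(xs, residuals):
    """Find the single-split tree that best fits the residuals."""
    best = None
    for threshold in sorted(set(xs))[:-1]:
        left = [r for x, r in zip(xs, residuals) if x <= threshold]
        right = [r for x, r in zip(xs, residuals) if x > threshold]
        left_mean = sum(left) / len(left)
        right_mean = sum(right) / len(right)
        error = (sum((r - left_mean) ** 2 for r in left)
                 + sum((r - right_mean) ** 2 for r in right))
        if best is None or error < best[0]:
            best = (error, threshold, left_mean, right_mean)
    _, threshold, left_mean, right_mean = best
    return lambda x: left_mean if x <= threshold else right_mean


def gradient_boost(xs, ys, num_round=25, eta=0.1):
    """Return a predictor that sums num_round stumps, each scaled by eta."""
    base = sum(ys) / len(ys)
    stumps = []

    def predict(x):
        return base + eta * sum(stump(x) for stump in stumps)

    for _ in range(num_round):
        # For squared error, the negative gradient is the residual.
        residuals = [y - predict(x) for x, y in zip(xs, ys)]
        stumps.append(fit_stump(xs, residuals))
    return predict


def mean_squared_error(model, xs, ys):
    return sum((y - model(x)) ** 2 for x, y in zip(xs, ys)) / len(xs)


# Toy data (hypothetical): a step from roughly 1 to roughly 3.
xs = [1, 2, 3, 4, 5, 6, 7, 8]
ys = [1.0, 1.2, 0.9, 1.1, 3.0, 3.2, 2.9, 3.1]
weak_model = gradient_boost(xs, ys, num_round=1)
boosted_model = gradient_boost(xs, ys, num_round=25)
```

Each round fits a new weak model to whatever error remains, so the training error of `boosted_model` is lower than that of `weak_model`. The `eta` and `num_round` names mirror the hyperparameters set later in the tutorial.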

XGBoost is best suited to three kinds of problems: classification, regression, and ranking.

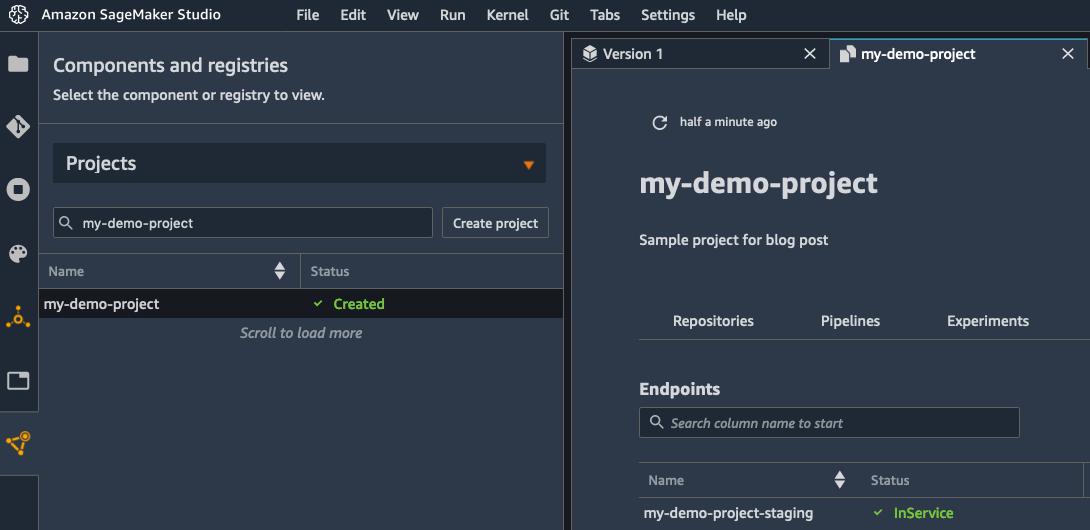

Using XGBoost on Amazon SageMaker

XGBoost is a downloadable open source library that runs almost anywhere, so you can use it on Amazon SageMaker. Amazon SageMaker is a managed training and hosting platform for machine learning workflows. Developers and data scientists can use Amazon SageMaker to train and deploy machine learning models without managing any infrastructure. You can always bring your own training and hosting containers to the Amazon SageMaker platform, but you can also use the algorithms and libraries that come with Amazon SageMaker, including XGBoost. There are many reasons to use XGBoost on Amazon SageMaker.

In this tutorial, you use Amazon SageMaker to train and deploy an XGBoost model that detects whether a person is likely to default on their credit card. You use the "default of credit card clients" dataset [1] from the UCI Machine Learning Repository.

First, create an Amazon S3 bucket. This is where you store the training dataset and the model that Amazon SageMaker outputs after the training job completes. Next, create an Amazon SageMaker notebook instance. The notebook instance provides a managed Jupyter notebook environment, where you download the dataset, process the data, train the model, and make predictions.

First, go to the Amazon S3 console at https://s3.console.aws.amazon.com/.

Select Create Bucket.

In the window that appears, give the bucket a name such as "yourname-sagemaker" (S3 bucket names must be lowercase).

Select Create.

Make a note of the bucket you created, and then go to the Amazon SageMaker console at https://console.aws.amazon.com/sagemaker/ in the same Region.

Select Create Notebook Instance.

In the window that appears, give the notebook a name such as "YourName-Notebook".

Select Create a new role in the IAM role drop-down list.

When creating the new role, specify the name of your bucket in the Specific S3 buckets text box. This gives Amazon SageMaker permission to access the Amazon S3 bucket.

Select Create Role.

Select Create Notebook Instance.

When your notebook instance is ready, you can open the notebook.

Select Open.

Amazon SageMaker notebook instances come with a variety of sample notebooks, but in this tutorial you author a new notebook.

Select New.

Select conda_python2 to open a new notebook in a Python 2.7 environment.

Now that the notebook is ready, you can enter the code that downloads and displays the dataset, trains the model, and makes predictions.

Enter the following code in the Jupyter notebook to complete the tutorial. Press the Jupyter play button or Shift+Enter to run the code in the selected notebook cell.

First, set up the notebook. To do so, define the Amazon S3 bucket you are using, import the necessary libraries, and get the Amazon SageMaker execution role (an IAM role) from the notebook environment. The training job needs this role to access your data.

```python
bucket = 'yourname-sagemaker'
prefix = 'sagemaker/xgboost_credit_risk'

# Import the libraries used throughout the tutorial
import boto3
import re
import pandas as pd
import numpy as np
import matplotlib.pyplot as plt
import os
import sagemaker
from sagemaker import get_execution_role
from sagemaker.predictor import csv_serializer

# Define IAM role
role = get_execution_role()
```

Next, download the dataset, which is provided as an XLS file.

```python
!wget https://archive.ics.uci.edu/ml/machine-learning-databases/00350/default%20of%20credit%20card%20clients.xls
```

```python
dataset = pd.read_excel('default of credit card clients.xls')
# Limit how much of the table the notebook displays
pd.set_option('display.max_rows', 8)
pd.set_option('display.max_columns', 15)
dataset
```

Reading the dataset shows that there are 30,000 records, and that each record has 23 attributes describing features related to that person's credit. The attributes are as follows.

The "Y" attribute is the target attribute, that is, the attribute you want XGBoost to predict. Because the target attribute is binary, the model performs binary prediction, also known as binary classification. In this dataset, a 1 in column Y means that the person has previously defaulted, and a 0 means that they have not.

Typically, you would use feature engineering to select the best inputs for the model. Feature engineering is an iterative process that requires experimentation: you create many models to find the input features that give the best model performance. This tutorial skips that step and trains XGBoost on the features as given.

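Amazon SageMaker XGBoost can train on data in either CSV or LibSVM format. This example uses CSV, which the algorithm expects to have no header row and the target variable in the first column.

To get the dataset into this layout, remove the "ID" entry and move the "Y" column so that it is the first column of the DataFrame.

```python
# With the default axis, drop('ID') removes the row labeled 'ID'.
# If your pandas version loads 'ID' as a column instead, pass axis=1.
dataset = dataset.drop('ID')
# Put the target column 'Y' first, followed by all remaining columns.
dataset = pd.concat([dataset['Y'], dataset.drop(['Y'], axis=1)], axis=1)
```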
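The CSV layout described above can be sketched without pandas. The snippet below is only an illustration: the helper name `to_training_csv` and the two sample records are hypothetical, and the real dataset has 23 feature columns rather than two.

```python
def to_training_csv(header, rows, target="Y"):
    """Move the target column to the front and emit rows without a header."""
    target_index = header.index(target)
    lines = []
    for row in rows:
        features = row[:target_index] + row[target_index + 1:]
        ordered = [row[target_index]] + features
        lines.append(",".join(str(value) for value in ordered))
    return "\n".join(lines) + "\n"


# Hypothetical sample: two feature columns followed by the target.
header = ["X1", "X2", "Y"]
rows = [[20000, 2, 1], [120000, 1, 0]]
csv_text = to_training_csv(header, rows)
```

Here `csv_text` contains one line per record with the label first and no header line, which is the shape that `to_csv(..., header=False, index=False)` produces from the rearranged DataFrame.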

Next, split the dataset into training, validation, and test sets. XGBoost trains on the training set and uses the validation set to evaluate its predictions as the model trains. After deploying the model, you run predictions on the test set.

```python
# Shuffle the rows, then cut at 70% and 90% to get a 70/20/10 split
train_data, validation_data, test_data = np.split(
    dataset.sample(frac=1, random_state=1729),
    [int(0.7 * len(dataset)), int(0.9 * len(dataset))])

# Write the files without a header or index column
train_data.to_csv('train.csv', header=False, index=False)
validation_data.to_csv('validation.csv', header=False, index=False)
```
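The 70/20/10 split can also be written with the standard library alone, which makes the two cut points explicit. This is a sketch under assumptions: `split_dataset` is a hypothetical helper, and the shuffle it produces differs from the one `dataset.sample` generates even with the same seed.

```python
import random


def split_dataset(records, train_pct=70, validation_pct=20, seed=1729):
    """Shuffle the records, then cut them into train/validation/test parts."""
    shuffled = list(records)
    random.Random(seed).shuffle(shuffled)
    # Integer arithmetic avoids floating-point surprises at the cut points.
    train_end = len(shuffled) * train_pct // 100
    validation_end = len(shuffled) * (train_pct + validation_pct) // 100
    return (shuffled[:train_end],
            shuffled[train_end:validation_end],
            shuffled[validation_end:])


# Stand-in for the 30,000 records: just their row numbers.
train, validation, test = split_dataset(range(30000))
```

Every record lands in exactly one of the three sets, so the test set stays unseen until after the model is deployed.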

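When the dataset is ready, upload it to Amazon S3 and record the location so that the training job can read it.

```python
# Upload the training and validation files to Amazon S3
s3 = boto3.Session().resource('s3')
s3.Bucket(bucket).Object(os.path.join(prefix, 'train/train.csv')).upload_file('train.csv')
s3.Bucket(bucket).Object(os.path.join(prefix, 'validation/validation.csv')).upload_file('validation.csv')

# upload_file() returns None, so describe the S3 locations explicitly for training
s3_input_train = sagemaker.s3_input(s3_data='s3://{}/{}/train'.format(bucket, prefix), content_type='csv')
s3_input_validation = sagemaker.s3_input(s3_data='s3://{}/{}/validation'.format(bucket, prefix), content_type='csv')
```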

The next step starts the XGBoost training. First, define the location of the Amazon SageMaker XGBoost training container. Then, create an Amazon SageMaker estimator. Simply by changing the values of train_instance_count and train_instance_type, you can change the number and size of the instances you train on. This lets you scale out and distribute training.

```python
# Location of the XGBoost training container in each supported Region
containers = {'us-west-2': '433757028032.dkr.ecr.us-west-2.amazonaws.com/xgboost:latest',
              'us-east-1': '811284229777.dkr.ecr.us-east-1.amazonaws.com/xgboost:latest',
              'us-east-2': '825641698319.dkr.ecr.us-east-2.amazonaws.com/xgboost:latest',
              'eu-west-1': '685385470294.dkr.ecr.eu-west-1.amazonaws.com/xgboost:latest'}

sess = sagemaker.Session()

# Create the estimator for the current Region
xgb = sagemaker.estimator.Estimator(containers[boto3.Session().region_name],
                                    role,
                                    train_instance_count=1,
                                    train_instance_type='ml.m4.xlarge',
                                    output_path='s3://{}/{}/output'.format(bucket, prefix),
                                    sagemaker_session=sess)

xgb.set_hyperparameters(eta=0.1,
                        objective='binary:logistic',
                        num_round=25)

# Start the training job
xgb.fit({'train': s3_input_train, 'validation': s3_input_validation})
```
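The container lookup fails with a bare `KeyError` if the notebook runs in a Region that is not in the dictionary. As a small sketch, a wrapper can make that failure easier to read. The function name `image_for_region` is hypothetical, and the registry paths are only the four listed in this tutorial.

```python
# Registry paths copied from this tutorial; other Regions are not listed here.
containers = {
    'us-west-2': '433757028032.dkr.ecr.us-west-2.amazonaws.com/xgboost:latest',
    'us-east-1': '811284229777.dkr.ecr.us-east-1.amazonaws.com/xgboost:latest',
    'us-east-2': '825641698319.dkr.ecr.us-east-2.amazonaws.com/xgboost:latest',
    'eu-west-1': '685385470294.dkr.ecr.eu-west-1.amazonaws.com/xgboost:latest',
}


def image_for_region(region_name):
    """Return the training image for a Region, or raise a descriptive error."""
    if region_name not in containers:
        raise ValueError(
            'No XGBoost container listed for Region {!r}; known Regions: {}'.format(
                region_name, ', '.join(sorted(containers))))
    return containers[region_name]


image = image_for_region('us-east-1')
```

In the notebook, `image_for_region(boto3.Session().region_name)` could stand in for the direct dictionary lookup.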

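XGBoost also has many hyperparameters that you can tune to improve model performance. Here, you set values for a few of the most commonly tuned hyperparameters. Note that the objective parameter is set to binary:logistic. This parameter tells XGBoost what kind of problem (classification, regression, ranking, and so on) to solve. In this case, you are solving a binary classification problem by predicting whether a person is likely to default on their credit card.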
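With the binary:logistic objective, the model's raw score is passed through the logistic function to produce a probability, and training minimizes the log loss of that probability against the 0/1 label. The sketch below shows only that arithmetic; the function names are illustrative and are not part of the XGBoost API.

```python
import math


def sigmoid(raw_score):
    """Map a raw model score to a probability between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-raw_score))


def log_loss(label, probability):
    """Penalty for predicting `probability` when the true label is 0 or 1."""
    return -(label * math.log(probability)
             + (1 - label) * math.log(1.0 - probability))


# A positive raw score leans toward "default" (label 1), a negative one away.
confident_default = sigmoid(2.0)
confident_no_default = sigmoid(-2.0)
```

For a person who did default (label 1), `log_loss(1, confident_default)` is smaller than `log_loss(1, confident_no_default)`, which is why training pushes raw scores up for defaulters and down for everyone else.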

When training completes, the training log is displayed. The log contains metrics such as the training and validation errors, which help you evaluate model performance. Training logs are also available in Amazon CloudWatch Logs.

After training the model, deploy it to Amazon SageMaker hosting. It takes a few minutes to set up the hosting endpoint.

```python
xgb_predictor = xgb.deploy(initial_instance_count=1, instance_type='ml.m4.xlarge')
```

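The final step is prediction. First, set up the serializer, which takes a NumPy array (such as the test data) and serializes it to CSV format. Next, define a prediction function that takes the test data as input and runs predictions on it. The predictions themselves come from the xgb_predictor.predict() function call.

```python
# Send requests as CSV and return the raw response
xgb_predictor.content_type = 'text/csv'
xgb_predictor.serializer = csv_serializer
xgb_predictor.deserializer = None

def predict(data, rows=500):
    # Split the data into batches of roughly `rows` rows per request
    split_array = np.array_split(data, int(data.shape[0] / float(rows) + 1))
    predictions = ''
    for array in split_array:
        predictions = ','.join([predictions, xgb_predictor.predict(array).decode('utf-8')])
    # Skip the leading comma, then parse the comma-separated probabilities
    return np.fromstring(predictions[1:], sep=',')

# Drop the first column (the label) before sending the test set
predictions = predict(test_data.values[:, 1:])
predictions
```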
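The batching logic can be tried without a live endpoint. The sketch below is an assumption-laden stand-in: `fake_endpoint_predict` is a hypothetical replacement for `xgb_predictor.predict()` that returns a constant score per row, and `predict_in_batches` uses fixed-size slices rather than `np.array_split`.

```python
def fake_endpoint_predict(rows):
    """Hypothetical stand-in for the endpoint: one CSV score per input row."""
    return ",".join("0.5" for _ in rows).encode("utf-8")


def predict_in_batches(data, rows=500, endpoint=fake_endpoint_predict):
    """Send `data` in batches of at most `rows` rows and collect the scores."""
    results = []
    for start in range(0, len(data), rows):
        batch = data[start:start + rows]
        response = endpoint(batch).decode("utf-8")
        results.extend(float(value) for value in response.split(","))
    return results


# 1,200 hypothetical feature rows are sent as batches of 500, 500, and 200.
test_rows = [[20000, 2]] * 1200
batch_predictions = predict_in_batches(test_rows)
```

Sending the data in batches keeps each request small, which matters because a hosted endpoint limits the size of a single request payload.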

The output is an array of predictions. The first element of the array is the probability that the person corresponding to the first row of input data will default. The array continues with the probabilities for all remaining rows in the test set.
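Because the test set still carries the true labels in its first column, the predicted probabilities can be compared against them. The following is a minimal sketch, assuming a cutoff of 0.5 (a choice made here for illustration, not something the tutorial prescribes) and a few made-up probabilities.

```python
def confusion_counts(probabilities, labels, threshold=0.5):
    """Count true/false positives/negatives at the given probability cutoff."""
    counts = {"tp": 0, "fp": 0, "tn": 0, "fn": 0}
    for probability, label in zip(probabilities, labels):
        predicted = 1 if probability >= threshold else 0
        if predicted == 1:
            counts["tp" if label == 1 else "fp"] += 1
        else:
            counts["tn" if label == 0 else "fn"] += 1
    return counts


# Hypothetical probabilities and labels, not real model output.
sample_probabilities = [0.91, 0.12, 0.55, 0.30]
sample_labels = [1, 0, 0, 1]
counts = confusion_counts(sample_probabilities, sample_labels)
accuracy = float(counts["tp"] + counts["tn"]) / len(sample_labels)
```

In the notebook, the same function could be called with `predictions` and the first column of `test_data` to see how many defaults the model catches and how many it misses.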

With the model hosted, you can start predicting which credit card customers are likely to default. This helps you assess a customer's creditworthiness, improve credit issuance decisions, and forecast future payments.

Summary

XGBoost is a powerful machine learning library for solving classification, regression, and ranking problems. When you use XGBoost on Amazon SageMaker, you don't need to configure or manage infrastructure, and you get additional benefits such as distributed training and managed model hosting. If you have a use case that XGBoost can solve, see the Amazon SageMaker sample notebooks. These notebooks include examples of how to implement XGBoost, including how to adapt the algorithm to other use cases.

Yash Pant is an enterprise solutions architect at AWS. He helps enterprise customers migrate and architect cloud applications, and is currently focused on machine learning workloads.

Saksham Saini is a software developer for Amazon SageMaker. He earned a bachelor's degree in computer engineering from the University of Illinois at Urbana-Champaign. He is currently working on building highly optimized, scalable algorithms for Amazon SageMaker. Outside of work, he enjoys reading, music, and traveling.

Yijie Zhuang is a software engineer for Amazon SageMaker. He earned a master's degree in computer engineering from Duke University. He is busy building algorithms and applications for Amazon SageMaker.
