Predicting driving speed violations with the Amazon SageMaker DeepAR algorithm

Forecasting is a critical part of many businesses and industries. Moving forward without clearly defined goals can have serious consequences. Product planning, financial forecasting, and weather forecasting all produce scientific estimates based on hard data and careful analysis. Time series forecasting breaks historical data down into a baseline, a trend, and seasonality, if any.

The Amazon SageMaker DeepAR forecasting algorithm is a machine learning algorithm for forecasting time series. It uses recurrent neural networks (RNNs) to produce both point forecasts and probabilistic forecasts. You can use DeepAR to forecast a single value for a scalar (one-dimensional) time series, or to build one model that works across hundreds of related time series at once. You can also forecast new time series that are related to the series the model was trained on.
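DeepAR's probabilistic forecasts are built from many sampled future paths, which are then summarized as quantiles (the inference request later in this post asks for `num_samples` paths and the 0.1, 0.5, and 0.9 quantiles). The following minimal NumPy sketch is not DeepAR's internal code; it only shows how quantiles summarize a set of sample paths. The Poisson samples are made-up stand-ins for model output, and `sample_quantiles` is a hypothetical helper name.

```python
import numpy as np

def sample_quantiles(samples, quantiles=(0.1, 0.5, 0.9)):
    """Summarize sampled forecast paths of shape (num_samples, horizon)
    into one array of length `horizon` per quantile."""
    samples = np.asarray(samples, dtype=float)
    # Keys are strings such as '0.1', matching the quantile names used later in this post
    return {str(q): np.quantile(samples, q, axis=0) for q in quantiles}

# Hypothetical stand-in for model output: 100 sampled paths over a 3-day horizon
rng = np.random.default_rng(0)
paths = rng.poisson(lam=[20, 25, 30], size=(100, 3))
summary = sample_quantiles(paths)
print(summary['0.1'], summary['0.5'], summary['0.9'])
```

The band between the 0.1 and 0.9 quantiles is the 80% interval plotted at the end of this post, and the 0.5 quantile is the median forecast.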

To illustrate time series forecasting, we use the DeepAR algorithm to analyze the City of Chicago's Speed Camera Violations dataset. The dataset is hosted by Data.gov and managed by the U.S. General Services Administration, Technology Transformation Service. The violations are captured by camera systems and are available on the Chicago Data Portal. You can use the dataset to identify patterns in the data and gain meaningful insights.

The dataset includes multiple cameras and the number of violations each camera recorded per day. If you treat each camera's daily violation count as a time series, you can use DeepAR to train one model across many streets at the same time and forecast violations for all of them.
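To make "one time series per camera" concrete, here is a minimal pandas sketch that turns long-format rows into one daily series per address and fills days with no record with 0. It assumes the column names used in this post's notebook (`ADDRESS`, `VIOLATION_DT`, `VIOLATIONS`), with the date already parsed into a datetime column as the notebook does below. The function name and the toy rows are hypothetical, and unlike the notebook's loop, this version sums any duplicate (address, date) rows.

```python
import pandas as pd

def to_daily_series(records):
    """Turn long-format rows (ADDRESS, VIOLATION_DT, VIOLATIONS) into one
    daily series per address; days without a record are filled with 0."""
    wide = records.pivot_table(index='VIOLATION_DT', columns='ADDRESS',
                               values='VIOLATIONS', aggfunc='sum')
    # Use the same calendar range for every address
    full_range = pd.date_range(wide.index.min(), wide.index.max(), freq='D')
    wide = wide.reindex(full_range).fillna(0)
    return {address: wide[address] for address in wide.columns}

# Hypothetical toy rows for two cameras
toy = pd.DataFrame({
    'ADDRESS': ['A ST', 'A ST', 'B AVE'],
    'VIOLATION_DT': pd.to_datetime(['2020-01-01', '2020-01-03', '2020-01-02']),
    'VIOLATIONS': [5, 7, 3],
})
series = to_daily_series(toy)
print(series['A ST'].tolist())  # [5.0, 0.0, 7.0]
```

Each value in the returned dictionary is one of the many related series that a single DeepAR model is trained on.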

This analysis helps identify seasonality in the data, showing the times of year when drivers are most likely to exceed the speed limit. Cities can use it to reduce driving speeds, create alternative routes, and take proactive measures to improve safety.

The code for this notebook is available in the GitHub repository.

Creating a Jupyter notebook

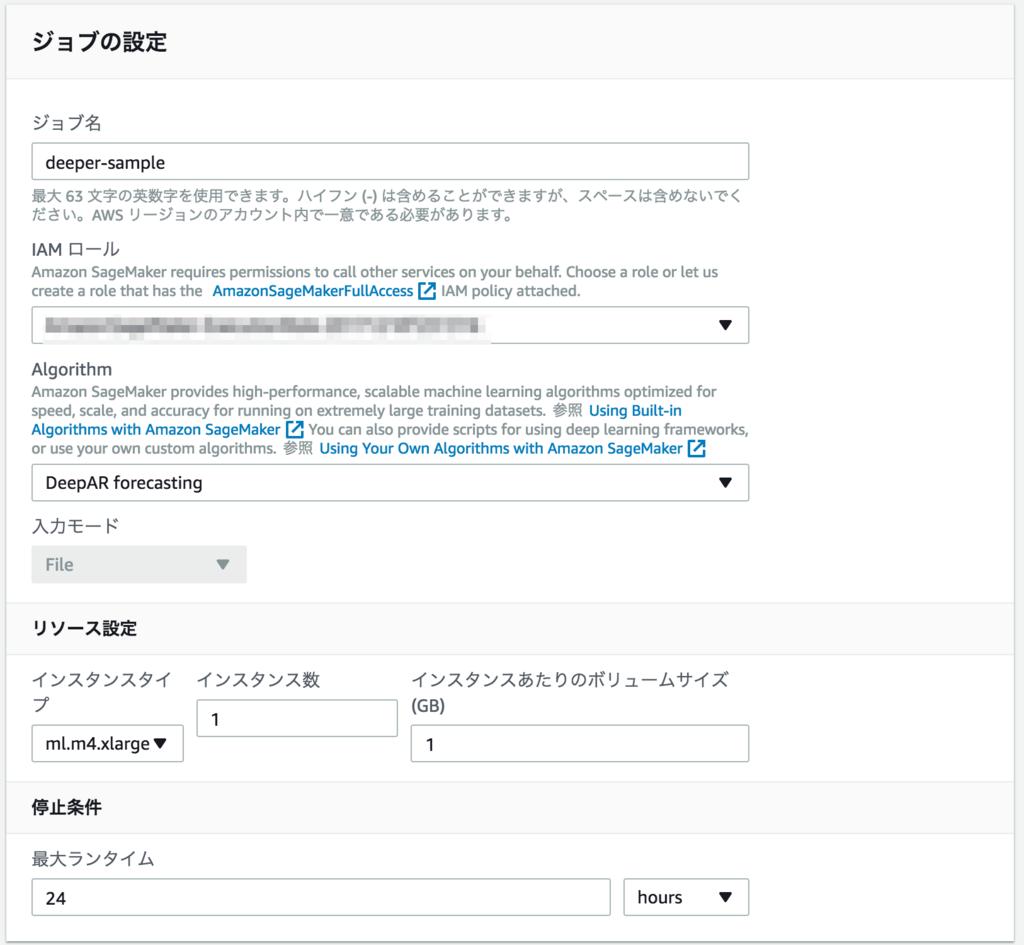

Before you start, create an Amazon SageMaker Jupyter notebook instance. This post uses an ml.m4.xlarge notebook instance and the built-in Python 3 kernel.

Importing libraries, downloading and visualizing the data

Download the data to the Jupyter notebook instance and upload it to an Amazon Simple Storage Service (Amazon S3) bucket. For training, use the address, the violation date, and the number of violations. The following code downloads the dataset and displays a few rows of its first three columns.

```python
import requests
import pandas as pd

datafile = 'speed_camera_violations.csv'  # local file name (illustrative choice)
url = 'https://data.cityofchicago.org/api/views/hhkd-xvj4/rows.csv?accessType=DOWNLOAD'

# Get the data from the City of Chicago site
r = requests.get(url, allow_redirects=True)
with open(datafile, 'wb') as f:
    f.write(r.content)

# Read the input file and display sample rows/columns
pd.set_option('display.max_columns', 500)
pd.set_option('display.max_rows', 50)
df = pd.read_csv(datafile, encoding='utf-8')
df[df.columns[0:3]]
```

Visualizing the data with Matplotlib

In this step, you convert the violation date from a string to a datetime column in the data frame, fill in zero violations for the days on which a camera has no record, and use Matplotlib to plot the violations for each street as a time series. This makes it easy to see each camera's and each street's data over time. See the following code:

```python
import matplotlib.pyplot as plt

# Convert the violation date from a string to a datetime column
df['VIOLATION_DT'] = pd.to_datetime(df['VIOLATION DATE'])

unique_addresses = df.ADDRESS.unique()
# Every calendar day between the first and last recorded violation
idx = pd.date_range(df.VIOLATION_DT.min(), df.VIOLATION_DT.max())
number_of_addresses = len(unique_addresses)
print('Unique Addresses {}'.format(number_of_addresses))
print('Minimum violation date is {}, maximum violation date is {}'.format(
    df.VIOLATION_DT.min(), df.VIOLATION_DT.max()))

# One daily series per address; days with no record are filled with 0
violation_list = []
for key in unique_addresses:
    temp_df = df.loc[df.ADDRESS == key, ['VIOLATION_DT', 'VIOLATIONS']].set_index('VIOLATION_DT')
    temp_df.index = pd.DatetimeIndex(temp_df.index)
    temp_df = temp_df.reindex(idx, fill_value=0)
    violation_list.append(temp_df['VIOLATIONS'])

# Plot every address on one chart
plt.figure(figsize=(12, 6), dpi=100, facecolor='w')
for key, address in enumerate(unique_addresses):
    plt.plot(violation_list[key], label=address)
plt.ylabel('Violations')
plt.xlabel('Date')
plt.title('Chicago Speed Camera Violations')
plt.legend(loc='upper center', bbox_to_anchor=(0.5, -0.05), shadow=False, ncol=4)
plt.show()
```

The following graph shows all of the data in the dataset as time series, with the number of speed violations on the y-axis plotted against the date on the x-axis.

Splitting the dataset for training and evaluation

Now you can split the data into a training set and a test set. The test dataset uses the last 30 days to evaluate the model's forecasts, and the training job never sees those last 30 days. The code converts each pandas Series into a JSON object, one JSON line per series, and writes the training and test files to Amazon S3; the test dataset is later used to check the quality of the trained model's forecasts. The following code creates the split and the training and test datasets.
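As a small illustration before the notebook code, the following sketch uses a made-up 40-day series to show the relationship between the two files: the training record is the test record with the last `prediction_length` values removed, and both start at the same timestamp. The helper is a simplified stand-in for the notebook's `series_to_jsonline` (it omits the optional `cat` field), and the data is hypothetical.

```python
import json
import pandas as pd

prediction_length = 30  # days held out for testing, as in this post

def series_to_jsonline(ts):
    # One JSON line per series: start timestamp plus target values as floats
    return json.dumps({'start': str(ts.index[0]), 'target': [float(v) for v in ts]})

# Hypothetical 40-day series of daily violation counts
full = pd.Series(range(40), index=pd.date_range('2020-01-01', periods=40, freq='D'))
train = full[:-prediction_length]  # training series excludes the last 30 days
test = full                        # test series keeps the full history

print(len(train), len(test))  # 10 40
print(series_to_jsonline(train))
```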

```python
import json
import s3fs

prediction_length = 30

# Split the data for training and validation by holding out the last prediction_length days
violation_list_training = []
for i in violation_list:
    violation_list_training.append(i[:-prediction_length])

def series_to_obj(ts, cat=None):
    # JSON object for one series: start timestamp plus target values as plain
    # Python floats (numpy int64 values are not JSON serializable)
    obj = {'start': str(ts.index[0]), 'target': [float(v) for v in ts]}
    if cat:
        obj['cat'] = cat
    return obj

def series_to_jsonline(ts, cat=None):
    return json.dumps(series_to_obj(ts, cat))

encoding = 'utf-8'
s3filesystem = s3fs.S3FileSystem()

# Training file: each series without its last 30 days
with s3filesystem.open(train_data_path, 'wb') as fp:
    for ts in violation_list_training:
        fp.write(series_to_jsonline(ts).encode(encoding))
        fp.write('\n'.encode(encoding))

# Test file: each full series, including the held-out 30 days
with s3filesystem.open(test_data_path, 'wb') as fp:
    for ts in violation_list:
        fp.write(series_to_jsonline(ts).encode(encoding))
        fp.write('\n'.encode(encoding))
```

Using Managed Spot Training and automatic model tuning for training

The Amazon SageMaker Python SDK provides a simple API for creating automatic model tuning jobs. In this example, Managed Spot Training reduces the cost of training. The tuning job searches for the hyperparameters that minimize the root mean square error (RMSE) on the test dataset. The HyperparameterTuner class in the Amazon SageMaker tuning package provides a simple interface for controlling how many training jobs run in total, and how many run in parallel, while searching for the optimal hyperparameters. Here the maximum number of jobs is set to 10, and all 10 run in parallel. Setting these numbers higher allows more hyperparameter tuning and can produce better results. The maximum training time per job is set to one hour, and the fit method starts the hyperparameter tuning job. See the following code:
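For reference, the tuning objective `test:RMSE` is reported by DeepAR when a test channel is supplied, by comparing its forecasts with the last `prediction_length` values of each test series; the tuner keeps the job with the lowest value. The following NumPy function is only the generic definition of root mean square error, not the algorithm's own evaluation code.

```python
import numpy as np

def rmse(actual, predicted):
    """Root mean square error between two equal-length sequences."""
    actual = np.asarray(actual, dtype=float)
    predicted = np.asarray(predicted, dtype=float)
    return float(np.sqrt(np.mean((actual - predicted) ** 2)))

# Errors of -1, 0, and 2 give sqrt((1 + 0 + 4) / 3)
print(rmse([10, 12, 14], [11, 12, 12]))
```

Because the errors are squared before averaging, a few large misses (such as unforecast spikes) raise RMSE more than many small ones.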

```python
import sagemaker
from sagemaker.tuner import IntegerParameter, CategoricalParameter, ContinuousParameter, HyperparameterTuner

# DeepAR estimator using Managed Spot Training (SageMaker Python SDK v1 parameter names)
deepar = sagemaker.estimator.Estimator(
    image_name,
    role,
    train_instance_count=1,
    train_instance_type='ml.m4.xlarge',
    train_use_spot_instances=True,  # use spot instances
    train_max_run=3600,             # max training time in seconds
    train_max_wait=3600,            # seconds to wait for spot instance
    output_path='s3://{}/{}'.format(bucket, s3_output_path),
    sagemaker_session=sess)

freq = 'D'
context_length = 30
deepar.set_hyperparameters(
    time_freq=freq,
    context_length=str(context_length),
    prediction_length=str(prediction_length))

# Search space for the automatic model tuning job
hyperparameter_ranges = {
    'mini_batch_size': IntegerParameter(100, 400),
    'epochs': IntegerParameter(200, 400),
    'num_cells': IntegerParameter(30, 100),
    'likelihood': CategoricalParameter(['negative-binomial', 'student-T']),
    'learning_rate': ContinuousParameter(0.0001, 0.1)}

objective_metric_name = 'test:RMSE'

tuner = HyperparameterTuner(
    deepar,
    objective_metric_name,
    hyperparameter_ranges,
    max_jobs=10,
    strategy='Bayesian',
    objective_type='Minimize',
    max_parallel_jobs=10,
    early_stopping_type='Auto')

s3_input_train = sagemaker.s3_input(
    s3_data='s3://{}/{}/train/'.format(bucket, prefix), content_type='json')
s3_input_test = sagemaker.s3_input(
    s3_data='s3://{}/{}/test/'.format(bucket, prefix), content_type='json')

tuner.fit({'train': s3_input_train, 'test': s3_input_test}, include_cls_metadata=False)
tuner.wait()
```

Deploying the best model

The tuner.best_training_job() method in the Amazon SageMaker Python SDK identifies the best training job found by the tuning job. This lets you deploy the model that minimizes the objective metric. With a single deploy API call, the best model identified by the automatic hyperparameter tuning job is deployed to an ml.m4.xlarge instance.

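```python
best_tuning_job_name = tuner.best_training_job()

# Deploy the best model to an endpoint named after the best training job.
# Note: tuner.deploy returns a predictor object for that endpoint.
endpoint_name = tuner.deploy(
    initial_instance_count=1,
    endpoint_name=best_tuning_job_name,
    instance_type='ml.m4.xlarge',
    wait=True)
```

The following partial output shows the training time and the billable time for the best model.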

```
…
Training seconds: 674
Billable seconds: 242
Managed Spot Training savings: 64.1%
```

Managed Spot Training in Amazon SageMaker reduced the cost of all 10 training jobs. The output above shows that the best of the 10 training jobs cost more than 64% less than it would have on an on-demand training instance.
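The savings figure follows from the two counters in the log: training seconds is the job's full duration, and billable seconds is what you pay for. A small sketch of that arithmetic, assuming the savings are measured against billing every training second at the on-demand rate; the function name is hypothetical.

```python
def spot_savings_percent(training_seconds, billable_seconds):
    """Percentage saved versus paying for every training second on demand."""
    return (1 - billable_seconds / training_seconds) * 100

savings = spot_savings_percent(674, 242)
print('{:.1f}%'.format(savings))  # 64.1%
```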

Inference

Next, define a DeepARPredictor class that extends sagemaker.predictor.RealTimePredictor with helper functions: one encodes pandas Series into the JSON request objects the endpoint expects, and another decodes the endpoint's response back into pandas DataFrames of forecast quantiles. With this class, you can run predictions on the test dataset. See the following code:
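Because the request is plain JSON, its shape can be inspected without an endpoint. The following sketch uses a hypothetical helper, `build_deepar_request`, and a made-up three-day series to build the same `instances` and `configuration` body that the class's `__encode_request` produces. It also shows how the forecast dates start one period after the last observed date, which is what the class uses to index the decoded quantiles.

```python
import json
import pandas as pd
from pandas.tseries.frequencies import to_offset

def build_deepar_request(series_list, num_samples=100, quantiles=('0.1', '0.5', '0.9')):
    """Build a JSON request body: one instance per series plus an inference configuration."""
    instances = [{'start': str(s.index[0]), 'target': [float(v) for v in s]}
                 for s in series_list]
    configuration = {'num_samples': num_samples,
                     'output_types': ['quantiles'],
                     'quantiles': list(quantiles)}
    return json.dumps({'instances': instances, 'configuration': configuration})

# Hypothetical three-day series
s = pd.Series([4, 0, 6], index=pd.date_range('2020-02-01', periods=3, freq='D'))
body = build_deepar_request([s])
print(body)

# Forecasts begin one day after the last observation and cover 30 days
first_forecast = s.index[-1] + to_offset('D')
forecast_index = pd.date_range(start=first_forecast, periods=30, freq='D')
print(forecast_index[0], forecast_index[-1])  # 2020-02-04 00:00:00 2020-03-04 00:00:00
```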

```python
import json
import pandas as pd
import sagemaker
from pandas.tseries.frequencies import to_offset

class DeepARPredictor(sagemaker.predictor.RealTimePredictor):

    def set_prediction_parameters(self, freq, prediction_length):
        """Set the time frequency and prediction length parameters.
        This method **must** be called before using `predict`.

        Parameters:
        freq -- string indicating the time frequency
        prediction_length -- integer, number of predicted time points

        Return value: none.
        """
        self.freq = freq
        self.prediction_length = prediction_length

    def predict(self, ts, cat=None, encoding='utf-8', num_samples=100, quantiles=['0.1', '0.5', '0.9']):
        """Request predictions for the time series listed in `ts`, each with an
        (optional) corresponding category listed in `cat`.

        Parameters:
        ts -- list of `pandas.Series` objects, the time series to predict
        cat -- list of integers (default: None)
        encoding -- string, encoding to use for the request (default: 'utf-8')
        num_samples -- integer, number of samples to compute at prediction time (default: 100)
        quantiles -- list of strings specifying the quantiles to compute (default: ['0.1', '0.5', '0.9'])

        Return value: list of `pandas.DataFrame` objects, each containing the predictions
        """
        # The first forecast point is one period after the last observed point
        prediction_times = [x.index[-1] + to_offset(self.freq) for x in ts]
        req = self.__encode_request(ts, cat, encoding, num_samples, quantiles)
        res = super(DeepARPredictor, self).predict(req)
        return self.__decode_response(res, prediction_times, encoding)

    def __encode_request(self, ts, cat, encoding, num_samples, quantiles):
        instances = [series_to_obj(ts[k], cat[k] if cat else None) for k in range(len(ts))]
        configuration = {'num_samples': num_samples, 'output_types': ['quantiles'], 'quantiles': quantiles}
        http_request_data = {'instances': instances, 'configuration': configuration}
        return json.dumps(http_request_data).encode(encoding)

    def __decode_response(self, response, prediction_times, encoding):
        response_data = json.loads(response.decode(encoding))
        list_of_df = []
        for k in range(len(prediction_times)):
            prediction_index = pd.date_range(start=prediction_times[k], freq=self.freq,
                                             periods=self.prediction_length)
            list_of_df.append(pd.DataFrame(data=response_data['predictions'][k]['quantiles'],
                                           index=prediction_index))
        return list_of_df

Predictor = DeepARPredictor(endpoint=best_tuning_job_name, sagemaker_session=sess,
                            content_type="application/json")
```

Visualizing the predictions

In the final step, use the predictor object to forecast five streets from the test dataset and plot the test data against the forecasts. You can check the model's performance by plotting the forecasts with an 80% interval (between the 0.1 and 0.9 quantiles) alongside the test data. See the following code and graph.

```python
Predictor.set_prediction_parameters(freq, prediction_length)

# Forecast the 30 held-out days for the first five addresses
list_of_df = Predictor.predict(violation_list_training[:5])
actual_data = violation_list[:5]

for k in range(len(list_of_df)):
    plt.figure(figsize=(12, 6), dpi=75, facecolor='w')
    plt.ylabel('Violations')
    plt.xlabel('Date')
    plt.title('Chicago Speed Camera Violations:' + unique_addresses[k])
    # Actual values for the context window plus the prediction window
    actual_data[k][-prediction_length-context_length:].plot(label='target')
    p10 = list_of_df[k]['0.1']
    p90 = list_of_df[k]['0.9']
    plt.fill_between(p10.index, p10, p90, color='y', alpha=0.5, label='80% confidence interval')
    list_of_df[k]['0.5'].plot(label='prediction median')
    plt.legend()
    plt.show()
```

The resulting pattern shows that the forecasts track the target, with the test data falling within the 80% interval. It also shows that violations at 1111 N Humboldt rose sharply on January 31, February 8, and February 15, 2020, around weekends.
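The plots judge the 80% interval by eye. One way to put a number on it is to measure the fraction of actual values that fall between the 0.1 and 0.9 quantiles; for a well-calibrated forecast this should be near 0.8 over many points. A minimal pandas sketch with a hypothetical helper name and made-up values; with the notebook's variables, you could call it as `interval_coverage(actual_data[k][-prediction_length:], list_of_df[k]['0.1'], list_of_df[k]['0.9'])`.

```python
import pandas as pd

def interval_coverage(actual, p10, p90):
    """Fraction of actual values that fall inside [p10, p90], aligned on date."""
    aligned = pd.concat({'actual': actual, 'p10': p10, 'p90': p90}, axis=1).dropna()
    inside = (aligned['actual'] >= aligned['p10']) & (aligned['actual'] <= aligned['p90'])
    return float(inside.mean())

# Hypothetical four-day example: two of the four actual values fall inside the band
dates = pd.date_range('2020-02-01', periods=4, freq='D')
actual = pd.Series([10, 25, 14, 3], index=dates)
p10 = pd.Series([8, 12, 10, 5], index=dates)
p90 = pd.Series([20, 22, 18, 15], index=dates)
print(interval_coverage(actual, p10, p90))  # 0.5
```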

If you plot all of the data points over the full period, you can see a seasonal pattern: speed violations rise sharply in the middle of the year, during the summer.
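To check this seasonality numerically rather than by eye, one simple option is to average daily violations by calendar month. The following sketch applies that to a synthetic two-year series with a built-in summer increase; on the real data, you could pass one of the series in `violation_list` instead. The function name and the data are hypothetical.

```python
import numpy as np
import pandas as pd

def monthly_profile(daily):
    """Average daily violations for each calendar month (1-12),
    pooled across all years in the series."""
    return daily.groupby(daily.index.month).mean()

# Hypothetical series: two years of daily counts, higher in June through August
idx = pd.date_range('2018-01-01', '2019-12-31', freq='D')
daily = pd.Series(np.where(idx.month.isin([6, 7, 8]), 40.0, 20.0), index=idx)
profile = monthly_profile(daily)
print(profile.idxmax(), profile.max())  # 6 40.0
```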

Cleaning up

After completing this tutorial, be sure to delete the prediction endpoint so that your AWS account doesn't continue to incur charges. See the following code:

```python
# Delete the endpoint (and, by default, its endpoint configuration)
Predictor.delete_endpoint()
```

You also need to delete the Amazon SageMaker notebook instance. For instructions, see Step 9: Clean Up.

Summary

In this post, we used the Amazon SageMaker DeepAR algorithm to train a model, forecast violations for multiple addresses at different camera locations, observe speed violations over time, and identify seasonality. With this data, the model can forecast the spikes in violations that occur on weekends and during the summer. This kind of analysis helps predict the streets on which drivers are most likely to speed at different times of the year. Cities can take proactive measures to reduce driving speeds, improve safety, and create alternative routes that reduce congestion.

If your business needs to forecast multiple related time series, you can use the DeepAR algorithm and the solution in this post. For more information about the DeepAR algorithm, see How the DeepAR Algorithm Works. For details on the algorithm's design, see DeepAR: Probabilistic Forecasting with Autoregressive Recurrent Networks.

Viral Desai is a Solutions Architect at AWS. He provides architectural guidance to help customers succeed in the cloud. In his spare time, he enjoys playing tennis and spending time with his family.
